You install that new Parkour game that everyone’s talking about, and instantly your avatar gains a new set of skills. After a few minutes in the tutorial level, running up walls and vaulting over obstacles, you’re ready for a bigger challenge. You teleport yourself into one of your favorite games, Grand Theft Auto: Metaverse, following a course set up by another player, and you’re soon rolling across car hoods, and jumping from rooftop to rooftop. Wait a minute… what’s that glow coming from under that mailbox? A mega-evolved Charizard! You pull up a Poké Ball from your inventory, capture it, and continue on your way…

This gameplay scenario couldn’t happen today, but in my view, it will. The concepts of composability (recycling, reusing, and recombining basic building blocks) and interoperability (having components of one game work within another) are coming to games, and I believe they will revolutionize how games are built and played.

Game developers will build faster because they won’t have to start from scratch each time. Able to try new things and take new risks, they’ll build more creatively. And there will be more of them, since the barrier to entry will be lower. The very nature of what it means to be a game will expand to include these new “meta experiences,” like the aforementioned example, that play out across and within other games.

Any discussion of “meta experiences,” of course, also invites discussion of another much-talked-about idea: the metaverse. Indeed, many see the metaverse as an elaborate game, but its potential is much greater. Ultimately, the metaverse represents how we humans will interact and communicate with each other online in the future. And in my view, it’s game creators, building on top of game technologies and following game production processes, who will be the key to unlocking the potential of the metaverse.

Why game creators? No other industry has as much experience building massive online worlds, in which hundreds of thousands (and sometimes tens of millions!) of participants engage with each other, often simultaneously. Modern games are already about much more than just “play”: they’re just as much about “trade,” “craft,” “stream,” or “buy.” The metaverse adds yet more verbs to that list, such as “work” or “love.” And just as microservices and cloud computing unlocked a wave of innovation in the tech industry, I believe the next generation of game technologies will usher in a new wave of innovation and creativity in gaming.

This is already happening in limited ways. Many games now support user-generated content (UGC), which allows players to build their own extensions to existing games. Some games, like Roblox and Fortnite, are so extensible that they already call themselves metaverses. But the current generation of game technologies, still largely built for single-player games, will only get us so far.

This revolution is going to require innovations across the entire technology stack, from production pipelines and creative tools, to game engines and multiplayer networking, to analytics and live services.

This piece outlines my vision for the stages of change coming to games, and then breaks down the new areas of innovation needed to kickstart this new era.

The Coming Evolution of Games

For a long time, games were primarily monolithic, fixed experiences. Developers would build them, ship them, and then start building a sequel. Players would buy them, play them, then move on once they had exhausted the content—often in as little as 10-20 hours of gameplay.

We’re now in the era of Games-as-a-Service, whereby developers continuously update their games post-launch. Many of these games also feature metaverse-adjacent UGC like virtual concerts and educational content. Roblox and Minecraft even feature marketplaces where player-creators can get paid for their work.

Critically, however, these games are still (purposefully) walled off from one another. While their respective worlds may be immense, they’re closed ecosystems, and nothing can be transferred between them—not resources, skills, content, or friends.

So how do we move past this legacy of walled gardens to unlock the potential of the metaverse? As composability and interoperability become important concepts for metaverse-minded game developers, we will need to rethink how we handle the following:

- Identity. In the metaverse, players will need a single identity that they can carry with them between games and across game platforms. Today, each game or platform requires its own player profile, so players must tediously rebuild their profile and reputation from scratch with each new game they play.

- Friends. Similarly, today’s games maintain separate friends lists or, at best, use Facebook as a de facto source of truth. Ideally, your network of friends would also follow you from game to game, making it easier to find friends to play with and to share competitive leaderboard information.

- Possessions. Currently, the items you acquire in one game cannot be transferred to or used in another game, and for good reason. Allowing a player to import a modern assault rifle into a medieval-era game might briefly be satisfying, but would quickly ruin the game. But with appropriate limits and restrictions, exchanging (some) items across games could open up new creativity and emergent play.

- Gameplay. Today’s games are tightly bound to their own gameplay. For example, the entire joy of the “platformer” genre, with games like Super Mario Odyssey, is achieving mastery over the virtual world. But by opening games up and allowing their elements to be recombined, players can more easily “remix” new experiences and explore their own “what-if” narratives.

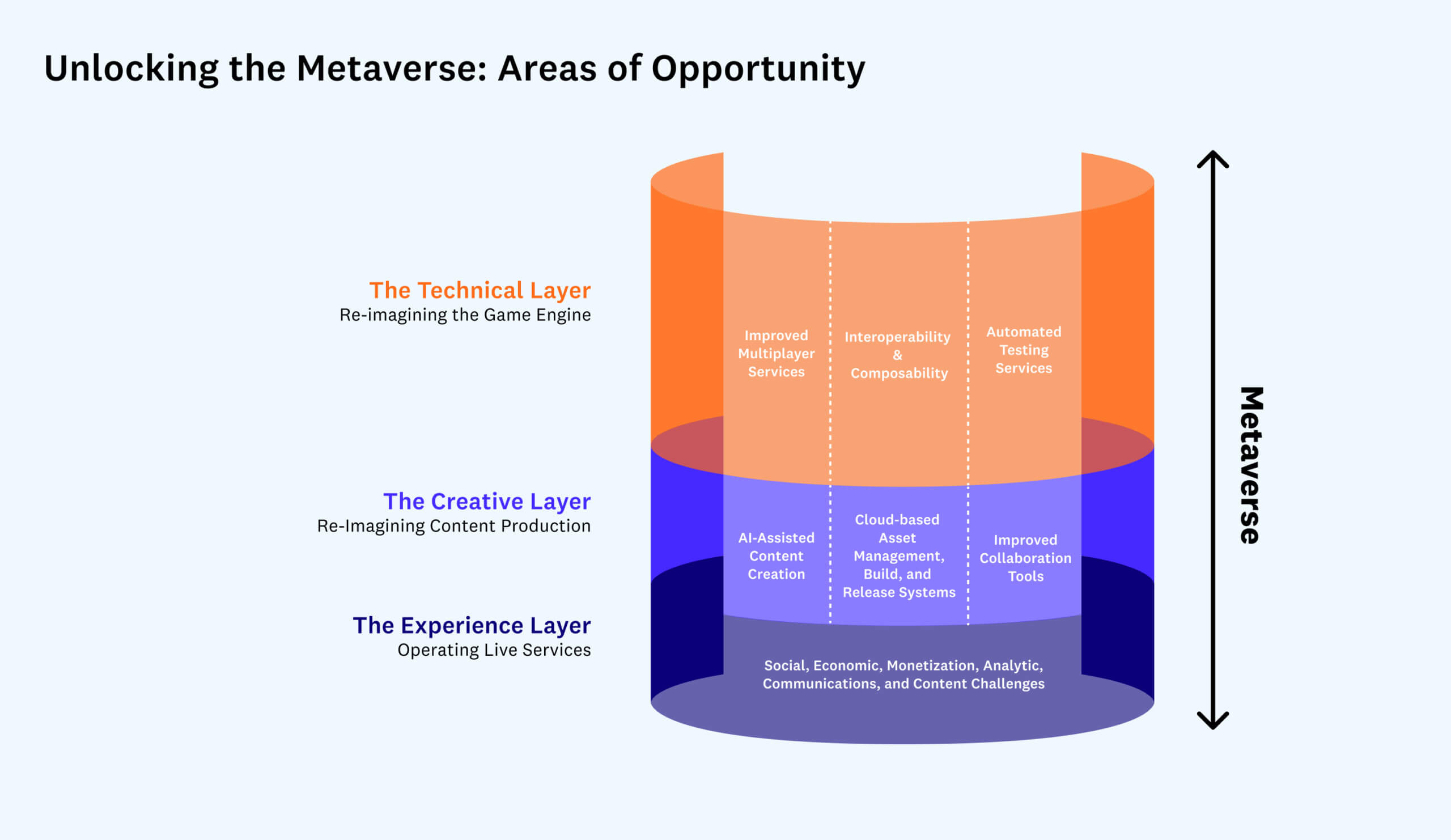

I see these changes happening at three clear layers of game development: the technical layer (game engines), the creative layer (content production), and the experience layer (live operations). At each layer, there are clear opportunities for innovation, which I’ll go into below.

Side note: Producing a game is a complex process involving many steps. Even more so than other forms of art, it’s highly nonlinear, requiring frequent looping back and iteration, since no matter how fun something may sound on paper, you can’t know if it’s actually fun until you play it. In this sense it’s more akin to choreographing a new dance, where the real work happens iteratively with dancers in the studio.

The section below outlines the game production process for those who may be unfamiliar with its unique complexities.


Pre-Production:

Pre-production is where the vision and plan for the game are worked out.

- Design. Designers develop a vision for the experience they want to create, imagining the desired gameplay and sketching concept art for what the experience may look like. Thought is given to what’s unique about this particular game, setting it apart from other games.

- Prototyping. Prototypes are built to try out these new ideas, focusing on the gameplay, often with very rough graphics and sound. The goal is to iterate as quickly as possible, making changes and trying them out “in game” to see what’s fun. An idea may sound fun on paper, but until you actually play it in game, it’s impossible to know.

- Planning. Once the core gameplay is figured out, the rest of the game can be designed around it, including story elements and lists of characters and environments, and production schedules can be created.

Production:

Production is where the bulk of the content needed for launch is created. Games consist of three ingredients: code, art, and data, all of which must be built and integrated together, then managed through many iterations and revisions.

- Programming. Writing the code for a game is similar to writing the code for any application. Games typically have both a “front-end,” which is the actual app that is downloaded and runs on the gaming device, and also a “back-end,” which is the code that runs out on servers, typically in the cloud, each with their own unique testing and release challenges. Games are commonly built on top of “engines” such as Unity or Unreal, which provide common functionality such as rendering graphics, simulating physics, and playing audio, so the game creator can focus on those aspects which make their game unique.

- Content. Creating content for a game is often the most complex and time-consuming part of production. The sheer number of “assets” needed for a large-scale game can be mind-boggling, and most assets are themselves built out of smaller assets. Consider something as simple as a rock placed in a world:

- The rock must be modeled by a 3D artist in a 3D package like Blender or Autodesk 3ds Max, similar to how a sculptor sculpts an object out of clay.

- The rock must be painted with a texture to make it look realistic. The texture is typically created by a 2D artist in a drawing program like Photoshop, often starting from a photograph.

- Other 2D textures may also be created to give the object more realism, providing information such as bumpiness, shininess, transparency, self-illumination, and more.

Characters, or items that move, are even more complex, as they need internal skeletons that describe how they can move. Animators must also create character animations for running, jumping, attacking, or even just standing around waiting, and characters need props to hold (like spears, guns, or backpacks). Meanwhile, sound designers must create matching sound effects, such as footsteps, that must be synchronized with animations to make the characters believable, and different sets of effects are required for different surfaces (dirt, grass, gravel, pavement, etc.). Characters also often have separate facial animations and mouth positions for lip-syncing.

Other assets needed include spoken dialogue, music, particle effects (such as fires or explosions), and interface elements (such as on-screen menus or status displays).

All of these assets are created in artist-friendly tools and saved as “source art” elements. They must then be combined together and turned into engine-friendly “in-game assets” that are optimized for display in real time and can be placed into an actual game level by a level designer.

- Data. Games also have extensive data needs, describing the thousands of little details that determine how the game behaves. How high do players jump? How powerful is a given weapon? How much experience must the player earn to go from level 4 to 5? How much damage does a particular monster dole out if it attacks you? How much money can you earn selling a certain fish in the market? All of these numbers must be painstakingly tuned to create a fun experience for the player, and even a single wrong number can ruin the game’s balance and turn players off.
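To make the idea of tuning data concrete, here is a minimal sketch that keeps these numbers as plain data rather than code. The tuning table, its field names, and the geometric XP curve are all hypothetical and chosen only for illustration. They are not taken from any real game.

```python
import json
import math

# Hypothetical tuning data, kept apart from code so designers can change numbers
# without touching gameplay logic. In a real project this might be a JSON file or spreadsheet.
TUNING_JSON = """
{
  "player": {"jump_height_m": 1.2},
  "weapons": {"iron_sword": {"damage": 12}},
  "monsters": {"cave_troll": {"attack_damage": 18}},
  "xp_curve": {"base_xp": 100, "growth": 1.5},
  "market": {"salmon": {"sell_price": 40}}
}
"""

tuning = json.loads(TUNING_JSON)


def xp_to_next_level(level: int, curve: dict) -> int:
    """XP needed to go from `level` to `level + 1` on an assumed geometric curve, rounded up."""
    if level < 1:
        raise ValueError("levels start at 1")
    return math.ceil(curve["base_xp"] * curve["growth"] ** (level - 1))


# Level 4 -> 5: 100 * 1.5**3 = 337.5, rounded up to 338
print(xp_to_next_level(4, tuning["xp_curve"]))
```

Changing a single value such as `growth` retunes every level at once. This is why one wrong number can throw off a game's balance.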

Testing:

Testing is a process that happens continuously during a game’s development, but the closer the game gets to launch, the higher the stakes. Most modern games are instrumented heavily with analytics, so game designers can measure the effectiveness of their efforts.

- Internal and external play testing. Play testing happens frequently during development. Most game studios try to get the game playable as quickly as possible, then maintain it in a playable state throughout the rest of production. Because you can’t know if something is fun until you play it, a big challenge in production is shrinking the time between when an artist or designer makes a change and when they can try out that change in game.

- Soft launch. When a game is nearly done, it’s common to “soft launch” it to a limited audience, either through a beta test program or by releasing it in a single geography (like New Zealand or the Philippines). During this soft launch, the studio carefully monitors certain key metrics, such as “D30” retention (the share of players still playing the game 30 days after installing it) and ARPU (average revenue per user). It’s not uncommon for a game to spend six months or more in soft launch, being tuned and tweaked, before it’s deemed ready for launch.
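Both soft-launch metrics are simple ratios over a cohort of players. The following is a minimal sketch that uses a hypothetical player record: the days the player was active, counted from install, plus their total spend. Exact definitions vary between studios. For example, some count "active on day 30" and others count "active any time on or after day 30." This sketch assumes the former.

```python
from dataclasses import dataclass, field


@dataclass
class Player:
    active_days: set[int] = field(default_factory=set)  # days since install (0 = install day)
    revenue: float = 0.0  # total spend during the measurement period


def d30_retention(cohort: list[Player]) -> float:
    """Share of an install cohort that played on day 30 after installing."""
    if not cohort:
        return 0.0
    return sum(1 for p in cohort if 30 in p.active_days) / len(cohort)


def arpu(cohort: list[Player]) -> float:
    """Average revenue per user: total revenue over all users, paying or not."""
    if not cohort:
        return 0.0
    return sum(p.revenue for p in cohort) / len(cohort)


# Hypothetical four-player cohort, purely for illustration.
cohort = [
    Player(active_days={0, 1, 7, 30}, revenue=5.0),
    Player(active_days={0, 1}),
    Player(active_days={0, 3, 30, 31}, revenue=1.0),
    Player(active_days={0}),
]
print(f"D30 retention: {d30_retention(cohort):.0%}")  # 2 of 4 players -> 50%
print(f"ARPU: ${arpu(cohort):.2f}")  # (5.00 + 1.00) / 4 -> $1.50
```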

Launch:

Launching a game is one of the most difficult moments for a game studio, as the marketing team battles to get mindshare and attention for this new game—while also making sure all these players trying it out for the first time have a great experience.

- Community Building. Building an audience for a game typically starts long before launch, as the studio attempts to create a community around the game with sneak peeks, open testing periods, buzz from key online influencers and streamers, and much more. These early communities are so critical to a game’s success that they are often called the “golden cohort.”

- Release. Getting the game into the hands of players typically means uploading it to various app stores on various devices, each with their own processes. It also means creating all the various assets needed, such as screenshots and descriptions, and making sure the back-end is ready for the expected load. For multiplayer games, having enough game servers to handle the expected load of players can be a real challenge, even with cloud computing, since cloud providers like AWS may be limited in how many servers they can make available at any given time—especially in smaller data centers.

Post-launch:

With a modern Game-as-a-Service, launch is just the beginning: afterward, the real work begins as teams must maintain and update their games.

- LiveOps. Teams must continually invest in maintaining their games, or else players will inevitably leave to spend their time elsewhere. LiveOps includes releasing new content, running sales and promotions, monitoring analytics to detect and correct problems, and running marketing campaigns to attract new players.

- Events. One of the most important and effective post-launch activities is hosting and running live events inside a game. Events can be anything from short-term promotions (“sale on gold coins!”) to complex multiday affairs (“Moon Festival dungeon opening up, this weekend only, with exclusive rare items for the first 100 players to finish!”). Running an event requires creating and testing the new content, promoting it to players, and then following up afterwards with any prizes or rewards. Ideally, the LiveOps team handles as much of this as possible, with minimal involvement from engineers.
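A LiveOps team can run events without engineers when each event is defined as data rather than code. The following is a minimal sketch of the "first 100 finishers" event described above. The `LiveEvent` structure and its field names are hypothetical and do not reflect any particular LiveOps product's schema. A live game would keep this state on a server and would need to handle many players finishing at the same moment, which the sketch ignores.

```python
from dataclasses import dataclass, field
from datetime import datetime, timezone
from typing import Optional


@dataclass
class LiveEvent:
    """Hypothetical event definition a LiveOps team could author as data, not code."""
    name: str
    starts: datetime
    ends: datetime
    reward_item: str
    reward_limit: int  # only the first N finishers receive the reward
    finishers: list[str] = field(default_factory=list)

    def is_active(self, now: datetime) -> bool:
        return self.starts <= now < self.ends

    def record_finish(self, player_id: str, now: datetime) -> Optional[str]:
        """Register a completion; return the reward item if this player qualifies, else None."""
        if not self.is_active(now) or player_id in self.finishers:
            return None  # event closed, or player already counted
        self.finishers.append(player_id)
        if len(self.finishers) <= self.reward_limit:
            return self.reward_item
        return None


# Illustrative weekend event (dates and names are made up).
moon_festival = LiveEvent(
    name="Moon Festival dungeon",
    starts=datetime(2024, 9, 14, tzinfo=timezone.utc),
    ends=datetime(2024, 9, 16, tzinfo=timezone.utc),
    reward_item="moon_crown",
    reward_limit=100,
)
print(moon_festival.record_finish("player-1", datetime(2024, 9, 15, tzinfo=timezone.utc)))
```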

The Technical Layer: Re-imagining the Game Engine

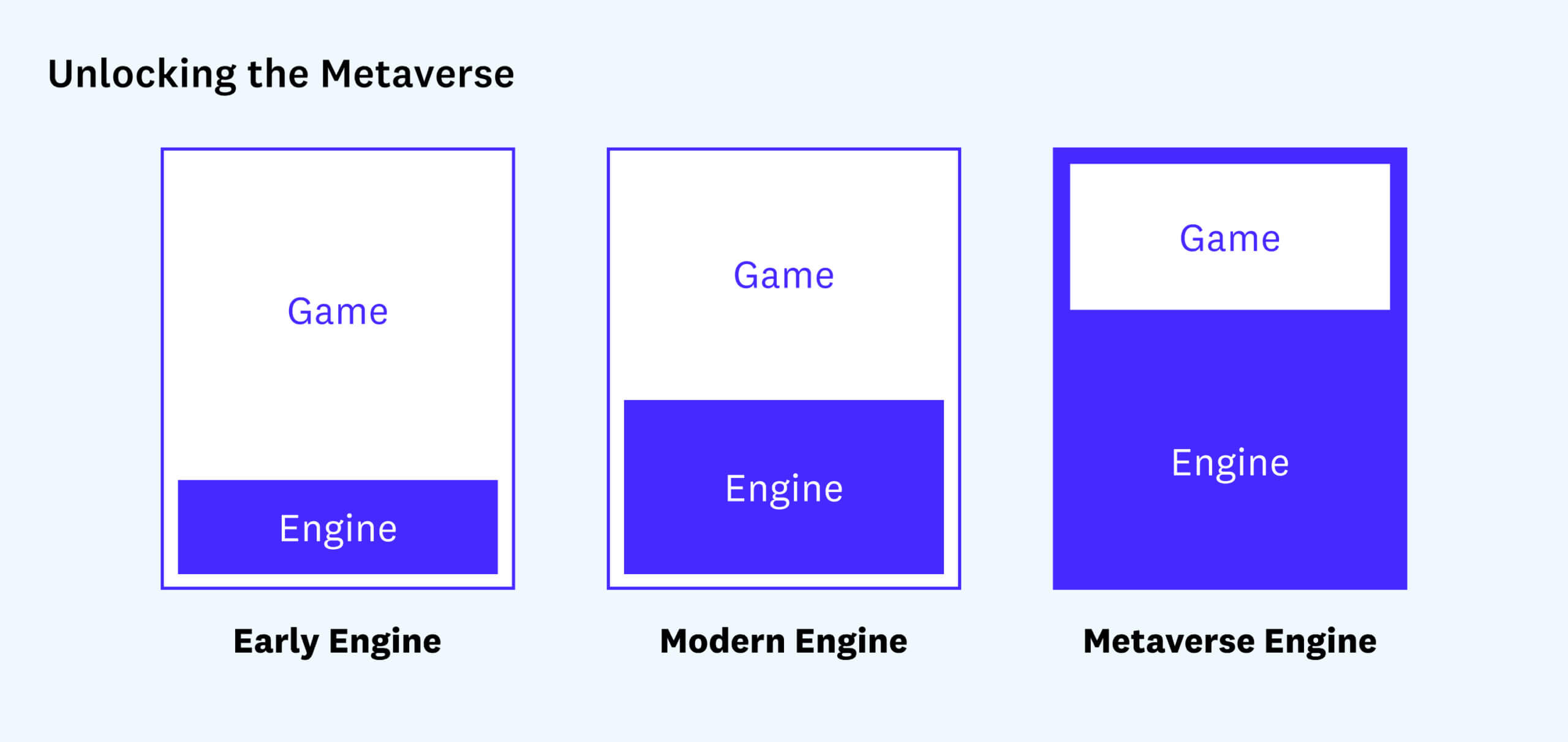

The core of most modern game development is the game engine, which powers the player’s experience and makes it easier for teams to build new games. Popular engines like Unity or Unreal provide common functionality that can be reused across games, freeing up game creators to build the pieces that are unique to their game. This not only saves time and money, but it also levels the playing field, allowing smaller teams to compete with larger ones.

That said, the fundamental role of the game engine relative to the rest of the game hasn’t really changed in the last 20 years. Engines have expanded the services they provide, from just graphics rendering and audio playback to multiplayer and social services, post-launch analytics, and in-game ads. Even so, they are still mostly shipped as libraries of code that each game wraps and embeds entirely.

When thinking about the metaverse, however, the engine takes on a more important role. To break down the walls that separate one game or experience from another, it is likely that games will be wrapped and hosted within the engine, instead of the other way around. In this expanded view, engines become platforms, and communication between these engines will largely define what I think of as the shared metaverse.
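The inversion described here, where the engine hosts the game rather than the game embedding the engine, can be sketched as a plugin interface. This is a hypothetical Python sketch and does not show how Roblox, Unity, or Unreal are actually structured. The platform owns the shared services (here, just identity and an inventory), and each game is a module that the platform registers and calls into. All class and method names are illustrative.

```python
from abc import ABC, abstractmethod
from dataclasses import dataclass, field


@dataclass
class PlayerIdentity:
    player_id: str
    display_name: str
    inventory: list[str] = field(default_factory=list)  # owned by the platform, not any one game


class Experience(ABC):
    """Interface every hosted game implements; the platform calls into it."""

    name: str

    @abstractmethod
    def on_player_join(self, player: PlayerIdentity) -> str:
        ...


class Platform:
    """The engine-as-platform: it hosts experiences and owns cross-game services."""

    def __init__(self) -> None:
        self.experiences: dict[str, Experience] = {}
        self.players: dict[str, PlayerIdentity] = {}

    def register(self, experience: Experience) -> None:
        self.experiences[experience.name] = experience

    def sign_in(self, player_id: str, display_name: str) -> PlayerIdentity:
        # One identity per player, reused by every experience on the platform.
        return self.players.setdefault(player_id, PlayerIdentity(player_id, display_name))

    def enter(self, player_id: str, experience_name: str) -> str:
        return self.experiences[experience_name].on_player_join(self.players[player_id])


class ParkourTutorial(Experience):
    name = "parkour"

    def on_player_join(self, player: PlayerIdentity) -> str:
        if "wall_run" not in player.inventory:
            player.inventory.append("wall_run")  # skill earned in this game...
        return f"{player.display_name} learned wall_run"


class CityRacer(Experience):
    name = "city"

    def on_player_join(self, player: PlayerIdentity) -> str:
        # ...is visible in this one, because the platform, not the game, stores it.
        return f"{player.display_name} enters the city (wall_run={'wall_run' in player.inventory})"


platform = Platform()
platform.register(ParkourTutorial())
platform.register(CityRacer())
platform.sign_in("p1", "Ada")
print(platform.enter("p1", "parkour"))
print(platform.enter("p1", "city"))
```

The design choice is that neither game knows about the other. They share state only through services the platform owns, which is what lets skills or items follow the player.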

Take Roblox, for example. The Roblox platform provides the same key services as Unity or Unreal, including graphics rendering, audio playback, physics, and multiplayer. However, it also provides other unique services, like player avatars and identities that can be shared across its catalog of games; expanded social services, including a shared friends list; robust safety features to protect the community; and tools and asset libraries to help players create new games.

Roblox still falls short as a metaverse, however, because it is a walled garden. While there is some limited sharing between games on the Roblox platform, there is no sharing or interoperability between Roblox and other game engines or game platforms.

To fully unlock the metaverse, game engine developers must innovate when it comes to 1) interoperability & composability, 2) improved multiplayer services, and 3) automated testing services.

Interoperability and composability

To unlock the metaverse and allow, for example, Pokémon hunting in the Grand Theft Auto universe, these virtual worlds will require an unprecedented level of cooperation and interoperability. And while it’s possible that a single company could come to control the universal platform that powers a global metaverse, that’s neither desirable nor likely. Instead, it’s more likely that decentralized game engine platforms will emerge.

I can’t, of course, talk about decentralized technology without mentioning web3. Web3 refers to a set of technologies, built on blockchains and using smart contracts, that decentralize ownership by shifting control of key networks and services to their users and developers. In particular, concepts like composability and interoperability in web3 are useful for solving some of the core issues faced in moving towards the metaverse, especially identity and possessions, and an enormous amount of research and development is going into core web3 infrastructure.

Nonetheless, while I believe web3 will be a critical component in reimagining the game engine, it is not a silver bullet.

The most obvious application of web3 technologies to the metaverse will likely be allowing users to purchase and own items in the metaverse, such as a plot of virtual real estate or clothes for a digital avatar. Because transactions written to blockchains are a matter of public record, purchasing an item as a non-fungible token (NFT) makes it theoretically possible to own an item and use it across multiple metaverse platforms, among several other applications.

However, I don’t think this will happen in practice until the following issues are addressed:

- Single-user identity, in which a player can move between virtual worlds or games with a single consistent identity. This is necessary for everything from matchmaking, to content ownership, to blocking trolls. One service trying to solve this problem is Hellō, a multi-stakeholder co-operative seeking to transform personal identity with a user-centric vision of identity, largely based on web2 centralized identities. Others, such as Spruce, use a web3 decentralized identity model that enables users to control their digital identity via wallet keys. Meanwhile, Sismo is a modular protocol that uses zero-knowledge attestations to enable decentralized identity curation, among other things.

- Universal content formats, so content can be shared between engines. Today each engine has its own proprietary format, which is necessary for performance. To exchange content between engines, however, standard open formats are needed. One such format is Pixar’s Universal Scene Description (USD), originally developed for film, which NVIDIA has also adopted as the foundation of its Omniverse platform. Standards, however, will be required for all content types.

- Cloud-based content storage, so that the content needed for one game can be located and accessed by others. Today the content needed for a game is typically either packaged with the game as part of a release package, or provided for download via the web (and accelerated with a Content Delivery Network, or CDN). For content to be shared between worlds, a standard way to look up and retrieve this content is needed.

- Shared payment mechanisms, so metaverse owners have financial incentives to allow assets to pass between metaverses. Digital asset sales are one of the main ways platform owners are compensated, especially with the “free-to-play” business model. So to incentivize platform owners to loosen their grip, asset owners could pay a “corkage fee” for the privilege of using their asset in the platform. Alternatively, if the asset in question is famous, the metaverse might also be willing to pay the asset owner to bring their asset into their world.

- Standardized functionality, so a metaverse can know how a given item is meant to be used. If I want to bring my fancy sword into your game, and use it to kill monsters, your game needs to know that this is a sword—and not just a pretty stick. One way to solve this is to attempt to create a taxonomy of standard object interfaces that every metaverse can choose to support—or not. Categories could include weapons, vehicles, clothing, or furniture.

- Negotiated look and feel, so content assets can transform their look-and-feel to match the universe they are entering. For example, if I have a high-tech sports car, and I want to drive into your very strictly steampunk-themed world, my car might have to transform into a steam-powered buggy to be allowed in. This may be something my asset knows how to do, or it could be the responsibility of the metaverse world I am entering to provide the alternative look and feel.
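Two of these requirements, standardized functionality and negotiated look and feel, can be illustrated together. The sketch below is hypothetical. It assumes a shared taxonomy of interface names such as "vehicle.car" or "weapon.sword," and a world that declares which interfaces it supports and how to restyle them to fit its theme. No such cross-engine standard exists today, and payment concerns like a corkage fee are left out.

```python
from dataclasses import dataclass, field
from typing import Optional


@dataclass(frozen=True)
class PortableItem:
    item_id: str
    interface: str  # entry in a hypothetical shared taxonomy, e.g. "weapon.sword"
    skin: str       # how the item looks in its home world


@dataclass
class WorldPolicy:
    theme: str
    supported: set[str]  # interfaces this world knows how to use
    restyles: dict[str, str] = field(default_factory=dict)  # interface -> themed skin

    def admit(self, item: PortableItem) -> Optional[PortableItem]:
        """Return the item as it will appear in this world, or None if it is refused."""
        if item.interface not in self.supported:
            return None  # the world can't tell what this item is for, so it stays out
        themed_skin = self.restyles.get(item.interface, item.skin)
        return PortableItem(item.item_id, item.interface, themed_skin)


steampunk_world = WorldPolicy(
    theme="steampunk",
    supported={"vehicle.car", "weapon.sword"},
    restyles={"vehicle.car": "steam-powered buggy"},
)
sports_car = PortableItem("car-42", "vehicle.car", "high-tech sports car")
rifle = PortableItem("rifle-7", "weapon.rifle", "modern assault rifle")

print(steampunk_world.admit(sports_car))  # skin becomes "steam-powered buggy"
print(steampunk_world.admit(rifle))       # None: rifles aren't a supported interface here
```

In this sketch, the entering world supplies the alternative look. The text notes that the asset itself could carry that knowledge instead.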

Improved multiplayer game systems

One big area of focus is multiplayer and social features. More and more of today’s games are multiplayer, since games with social features outperform single-player games by a wide margin. Because the metaverse will be entirely social by definition, it will be subject to all sorts of problems endemic to online experiences. Social games must worry about harassment and toxicity. They are also more prone to DDoS attacks from losing players, and they typically must operate servers in data centers around the world to minimize player lag and provide an optimal player experience.

Despite the importance of multiplayer features for modern games, there is still a lack of complete off-the-shelf solutions. Engines like Unreal or Roblox, and solutions like Photon or PlayFab, provide the basics, but there are gaps, like advanced matchmaking, that developers must fill for themselves.

Innovations to multiplayer game systems may include:

- Serverless multiplayer, whereby developers could implement authoritative game logic and have it automatically hosted and scaled out in the cloud, without having to worry about spinning up actual game servers.

- Advanced matchmaking, to help players quickly find other players of similar level to play against, including AI tools to help determine player skill and ranking. In the metaverse, this becomes even more important, since “matchmaking” becomes so much broader (e.g., “Find me another person to practice Spanish with,” and not just “Find me a group of players to raid a dungeon.”)

- Anti-toxicity and anti-harassment tools, to help identify and weed out toxic players. Any company hosting a metaverse will need to proactively worry about this, since online citizens won’t spend time in unsafe spaces—especially if they can just hop to another world with all of their status and possessions intact.

- Guilds or clans, to help players band together with other players, either to compete against other groups, or simply to have a more social shared experience. The metaverse will similarly be rife with opportunities for players to band together with other players to pursue shared goals, creating an opportunity for services such as creating or moderating a guild, as well as synchronization with external community tools like Discord.
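Skill-based matchmaking is often built on a rating system. The text doesn't name a method, so as one illustrative choice, the following sketch uses the classic Elo rating update. It then greedily pairs waiting players whose ratings fall within a fixed tolerance. Production systems often use models that also track rating uncertainty, such as Glicko-2 or TrueSkill, and typically widen the tolerance the longer a player waits. The player names and ratings below are made up.

```python
def expected_score(rating_a: float, rating_b: float) -> float:
    """Elo's expected score for A against B (0.5 when ratings are equal)."""
    return 1.0 / (1.0 + 10 ** ((rating_b - rating_a) / 400))


def update_elo(rating_a: float, rating_b: float, a_won: bool, k: float = 32) -> tuple[float, float]:
    """New ratings for A and B after one game; draws are ignored for simplicity."""
    delta = k * ((1.0 if a_won else 0.0) - expected_score(rating_a, rating_b))
    return rating_a + delta, rating_b - delta


def match_players(queue: dict[str, float], tolerance: float = 100) -> list[tuple[str, str]]:
    """Greedily pair rating-adjacent players whose ratings differ by at most `tolerance`."""
    ordered = sorted(queue, key=lambda name: queue[name])
    pairs = []
    i = 0
    while i < len(ordered) - 1:
        a, b = ordered[i], ordered[i + 1]
        if queue[b] - queue[a] <= tolerance:
            pairs.append((a, b))
            i += 2
        else:
            i += 1  # `a` keeps waiting for a closer opponent
    return pairs


print(update_elo(1500, 1500, a_won=True))  # (1516.0, 1484.0)
queue = {"ana": 1510, "bo": 1880, "cy": 1450, "dee": 1905, "eli": 1200}
print(match_players(queue))  # [('cy', 'ana'), ('bo', 'dee')]; eli keeps waiting
```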

Automated testing services

Testing is an expensive bottleneck when releasing any online experience, as a small army of game testers must repeatedly play through the experience to make sure everything works as expected, with no glitches or exploits.

Games which skip this step do so at their peril. Consider the recent launch of the highly anticipated game Cyberpunk 2077, which was loudly denounced by players due to the large number of bugs it launched with. Because the metaverse is essentially an “open world” game with no one set course, however, testing may be prohibitively expensive. One way to alleviate the bottleneck is to develop automated testing tools, such as AI agents that can play the game as a player might, looking for glitches, crashes, or bugs. A side benefit of this technology will be believable AI players, to either swap in for real players who unexpectedly drop out of multiplayer matches, or to provide early multiplayer “match liquidity” to reduce the time players must wait to start a match.

The innovations to automated testing services may include:

- Automated training of new agents, by observing real players interacting with the world. One benefit of this is that the agents will continue to get smarter and more believable the longer the metaverse is operating.

- Automated identification of glitches or bugs, along with a deep link to jump right to the point of the bug, so that a human tester can reproduce the issue and work to get it addressed.

- Swapping in AI agents for real players, so that if one player suddenly drops out of a multiplayer experience, the experience won’t come to an end for the other players. This feature also raises some interesting questions, like whether players can “tag out” to an AI counterpart at any time, or even “train” their own replacements to compete on their behalf. Will “AI-assisted” become a new category in tournaments?
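The core loop of an automated testing agent can be sketched very simply. The example below is a hypothetical toy. A random agent plays a tiny shop economy that has a deliberately planted bug, and it checks a set of invariants after every action. When an invariant breaks, the agent returns its random seed and the sequence of actions it took. That record plays the role of the "deep link" a human tester could replay. The agent here acts at random, whereas the agents described above would be trained by observing real players.

```python
import random
from dataclasses import dataclass
from typing import Optional

ACTIONS = ["earn", "buy", "sell"]


@dataclass
class ShopGame:
    """A toy economy with a deliberately planted bug."""
    gold: int = 0
    items: int = 0

    def step(self, action: str) -> None:
        if action == "earn":
            self.gold += 5
        elif action == "buy" and self.gold >= 10:
            self.gold -= 10
            self.items += 1
        elif action == "sell":
            # Planted bug: nothing checks that the player owns an item before selling it.
            self.gold += 8
            self.items -= 1


def invariant_violations(game: ShopGame) -> list[str]:
    """Rules that should always hold; any returned message is a bug report."""
    problems = []
    if game.items < 0:
        problems.append("negative inventory (sell exploit)")
    if game.gold < 0:
        problems.append("negative gold")
    return problems


def explore(seed: int, max_steps: int = 200) -> Optional[tuple[int, list[str], list[str]]]:
    """Play random actions; on the first violation return (seed, actions so far, problems)."""
    rng = random.Random(seed)  # seeded, so every run can be reproduced exactly
    game = ShopGame()
    actions: list[str] = []
    for _ in range(max_steps):
        action = rng.choice(ACTIONS)
        actions.append(action)
        game.step(action)
        problems = invariant_violations(game)
        if problems:
            return seed, actions, problems
    return None


def replay(actions: list[str]) -> ShopGame:
    """Re-run a recorded action sequence: the 'deep link' a human tester would follow."""
    game = ShopGame()
    for action in actions:
        game.step(action)
    return game


for seed in range(100):
    report = explore(seed)
    if report is not None:
        _, steps, problems = report
        print(f"seed={seed}: {problems} after {len(steps)} actions")
        break
```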

The Creative Layer: Re-Imagining Content Production

As 3D rendering technology gets more powerful, the amount of digital content needed to create a game keeps increasing. Consider the latest Forza Horizon 5 racing game: it was the largest Forza ever to download, requiring more than 100GB of disk space, up from 60GB for Horizon 4. And that’s just the tip of the iceberg. The original “source art files,” the files created by the artists and used to create the final game, can be many times larger still. Assets grow because both the size and quality of these virtual worlds keep growing, with a higher level of detail and greater fidelity.

Now consider the metaverse. The need for high-quality digital content will continue to increase, as more and more experiences move from the physical world to the digital world.

This is already happening in the world of film and TV. The recent Disney+ show The Mandalorian broke new ground by filming on a “virtual set” running in the Unreal game engine. This was revolutionary, because it cut the time and cost of production, while simultaneously increasing the scope and quality of the finished product. In the future, I expect more and more productions to be shot this way.

Furthermore, unlike physical film sets, which are usually destroyed after a shoot given the high cost of storing them, digital sets can easily be stored for future reuse. It therefore makes sense to invest more money, not less, to build a fully realized world that can later be reused to produce fully interactive experiences. Hopefully in the future, we will see these worlds made available to other creators to create new content set within those fictional realities, further fueling the growth of the metaverse.

Now consider how this content is created. Increasingly, it is created by artists distributed around the world. One of the lasting ramifications of Covid is a permanent push to remote development, with teams spread out across the world, often working from home. The benefit of remote development is clear—the ability to hire talent anywhere—but the costs are significant, including challenges with collaborating creatively, synchronizing the large number of assets needed to build a modern game, and maintaining the security of intellectual property.

Given these challenges, I see three large areas of innovation coming to digital content production: 1) AI-assisted content creation tools, 2) cloud-based asset management, build, and release systems, and 3) collaborative content generation.

AI-assisted content creation

Today, virtually all digital content is still built by hand, driving up the time and cost it takes to ship modern games. Some games have experimented with “procedural content generation,” in which algorithms help generate new dungeons or worlds, but these algorithms can themselves be quite difficult to build.
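Procedural content generation usually means a deterministic algorithm driven by a random seed, so the same seed always reproduces the same content. The text doesn't name a specific technique, so the following sketch uses a simple "drunkard's walk" cave carver as an illustrative one. The grid size and step count are arbitrary assumptions.

```python
import random


def carve_cave(seed: int, width: int = 20, height: int = 10, steps: int = 120) -> list[str]:
    """Carve floor tiles ('.') out of solid rock ('#') with a seeded random walk."""
    rng = random.Random(seed)  # a private RNG: same seed -> same cave
    grid = [["#"] * width for _ in range(height)]
    x, y = width // 2, height // 2
    for _ in range(steps):
        grid[y][x] = "."
        dx, dy = rng.choice([(1, 0), (-1, 0), (0, 1), (0, -1)])
        # Stay one tile inside the border so the cave is always enclosed by rock.
        x = min(max(x + dx, 1), width - 2)
        y = min(max(y + dy, 1), height - 2)
    return ["".join(row) for row in grid]


print("\n".join(carve_cave(seed=42)))
```

Producing a valid cave is the easy part. The hard part the text alludes to is designing rules whose output is consistently fun to play.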

A new wave of AI-assisted tools is coming, however, that will help artists and non-artists alike create content more quickly and at higher quality, driving down the cost of content production and democratizing game production.

This is especially important for the metaverse, because virtually everyone will be called upon to be a creator—but not everyone can create world-class art. And by art, I’m referring to the entire class of digital assets, including virtual worlds, interactive characters, music and sound effects, and so forth.

Innovations within AI-assisted content creation will include conversion tools that can turn photos, videos, and other real-world artifacts into digital assets, such as 3D models, textures, and animations. Examples include Kinetix, which can create animations from video; Luma Labs, which creates 3D models from photos; and COLMAP, which can create navigable 3D spaces from still photos.

There will also be innovation within creative assistants that take direction from an artist, and iteratively create new assets. Hypothetic, for example, can generate 3D models from hand-drawn sketches. Inworld.ai and Charisma.ai both use AI to create believable characters that players can interact with. And DALL-E can generate images from natural language inputs.

One important aspect to using AI-assisted content creation as part of game creation will be repeatability. Since creators must frequently go back and make changes, it’s not enough to just store the output from an AI tool. Game creators must store the entire set of instructions that created that asset, so an artist can go back and make changes later, or duplicate the asset and modify it for a new purpose.
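One way to achieve that repeatability is to store each AI-generated asset as a "recipe": the inputs and settings that produced it, along with a fingerprint that can serve as a version id. The following sketch is hypothetical. The tool name, model version string, and fields are placeholders and do not represent any real AI tool's API. Many generators are not fully deterministic, so in practice a studio would keep both the recipe and the generated file.

```python
import hashlib
import json
from dataclasses import asdict, dataclass, replace


@dataclass(frozen=True)
class AssetRecipe:
    """Everything needed to regenerate an AI-made asset (all fields hypothetical)."""
    tool: str           # name of the generator
    model_version: str  # pinned, since a newer model may produce different output
    prompt: str         # the artist's direction
    seed: int           # fixes the generator's randomness
    params: tuple[tuple[str, str], ...] = ()  # extra settings as (key, value) pairs

    def recipe_id(self) -> str:
        """Stable fingerprint of the instructions, usable as a version id or cache key."""
        canonical = json.dumps(asdict(self), sort_keys=True)
        return hashlib.sha256(canonical.encode("utf-8")).hexdigest()[:16]


ogre = AssetRecipe(
    tool="sketch-to-3d",
    model_version="v1.3",
    prompt="forest ogre, mossy skin",
    seed=1234,
)
# Duplicate and modify for a new purpose: same settings, new direction.
winter_ogre = replace(ogre, prompt="forest ogre, frosted skin")
print(ogre.recipe_id(), winter_ogre.recipe_id())
```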

Cloud-based asset management, build, and release systems

One of the biggest challenges that game studios must face when building a modern video game is managing all of the content needed to create a compelling experience. Today this is a largely unsolved problem with no standardized solution; each studio must cobble together its own.

To give a sense for why this is such a hard problem, consider the sheer amount of data involved. A large game can require literally millions of files of all different types, including textures, models, characters, animations, levels, visual effects, sound effects, recorded dialogue, and music.

Each of these files will change repeatedly during production, and it’s necessary to keep copies of each variation in case the creator needs to backtrack to an earlier version. Today, artists often cope with this need by simply renaming files (e.g., “forest-ogre-2.2.1”), which results in a proliferation of files. And because these files are typically large and hard to compress, and each revision must be stored separately, this takes up a lot of storage space. This is unlike source code, where it’s possible to store just the changes for each revision, which is very efficient. With many content files, like artwork, changing even a small part of the image can change virtually the entire file, so storing only the changes saves little.

Furthermore, these files do not exist in isolation. They are part of an overall process typically called the content pipeline, which describes how all of these individual content files come together to create the playable game. During this process, the “source art” files, which are created by artists, are converted and assembled through a series of intermediate files into the “game assets,” which are then used by the game engine.

Today’s pipelines are not very smart and are generally unaware of the dependencies between assets. The pipeline typically doesn’t know, for example, that a particular texture belongs to a 3D basket, which is held by a specific farmer character, who appears in a particular level. As a result, whenever any asset changes, the entire pipeline must be rebuilt to ensure that all changes are swept up and incorporated. This is a time-consuming process that can take several hours or more, slowing down the pace of creative iteration.

The needs of the metaverse will exacerbate these existing issues, and create some new ones. For example, the metaverse is going to be large—larger than the largest games today—so all the existing content storage issues apply. Additionally, the “always-on” nature of the metaverse means that new content will need to be streamed directly into the game engine; it won’t be possible to “stop” the metaverse to create a new build. The metaverse will need to be able to update itself on the fly. And to realize composability goals, remote and distributed creators will need ways to access source assets, create their own derivatives, and then share them with others.
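One way to picture updating “on the fly” is a registry that game code queries by name every time it needs an asset, so newly published content takes effect on the next lookup. This minimal sketch assumes a single process and in-memory data. `LiveAssetRegistry` and the asset name are hypothetical, and a real engine would also need to stream binary data and handle dependent assets.

```python
import threading
from typing import Any, Dict, Tuple


class LiveAssetRegistry:
    """Sketch: let new content go live while the world keeps running.

    Game code looks assets up by name when it needs them rather than keeping a
    copy loaded at startup, so a newly published version takes effect on the
    next lookup, with no rebuild and no restart.
    """

    def __init__(self) -> None:
        self._lock = threading.Lock()  # publishers and game threads may run concurrently
        self._assets: Dict[str, Tuple[int, Any]] = {}  # name -> (version, data)

    def publish(self, name: str, data: Any) -> int:
        with self._lock:
            version = self._assets.get(name, (0, None))[0] + 1
            self._assets[name] = (version, data)
            return version

    def get(self, name: str) -> Tuple[int, Any]:
        with self._lock:
            return self._assets[name]


registry = LiveAssetRegistry()
registry.publish("mailbox.glow_color", "blue")

seen = []
for frame in range(4):  # stand-in for the running game loop
    if frame == 2:
        # A creator pushes an update mid-session; nothing is stopped or rebuilt
        registry.publish("mailbox.glow_color", "orange")
    version, color = registry.get("mailbox.glow_color")
    seen.append((frame, version, color))
```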

Addressing these needs will create two main opportunities for innovation. First, artists need an easy-to-use, GitHub-like asset management system that gives them the same version control and collaboration tools that developers already enjoy. Such a system would need to integrate with all of the popular creator tools, such as Photoshop, Blender, and Sound Forge. Mudstack is one example of a company working in this space today.

Second, there is much to be done in content pipeline automation, which can modernize and standardize the art pipeline. This includes exporting source assets to intermediate formats and building those intermediate formats into game-ready assets. An intelligent pipeline would know the dependency graph and be capable of incremental builds: when an asset changes, only the files downstream of it would be rebuilt, dramatically reducing the time it takes to see the new content in-game.
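The incremental build described above amounts to walking the dependency graph downstream from the changed file and rebuilding only what it reaches, inputs first. The sketch below assumes an acyclic pipeline given as a mapping from each built asset to its inputs; the function name and file names are hypothetical, echoing the basket, farmer, and level example.

```python
from collections import defaultdict, deque
from typing import Dict, List


def rebuild_plan(depends_on: Dict[str, List[str]], changed: str) -> List[str]:
    """Return only the assets downstream of `changed`, in an order that builds inputs first.

    `depends_on` maps each built asset to the files it is produced from; the graph
    is assumed to be acyclic.
    """
    # Invert "asset depends on input" into "input is used by asset"
    used_by = defaultdict(list)
    for asset, inputs in depends_on.items():
        for source in inputs:
            used_by[source].append(asset)

    # Breadth-first walk from the changed file collects everything it affects
    dirty = {changed}
    queue = deque([changed])
    while queue:
        for consumer in used_by[queue.popleft()]:
            if consumer not in dirty:
                dirty.add(consumer)
                queue.append(consumer)

    # Kahn's topological sort, restricted to the dirty assets
    pending = {a: sum(1 for s in depends_on.get(a, []) if s in dirty) for a in dirty}
    ready = deque(sorted(a for a, n in pending.items() if n == 0))
    order = []
    while ready:
        asset = ready.popleft()
        order.append(asset)
        for consumer in sorted(used_by[asset]):
            if consumer in dirty:
                pending[consumer] -= 1
                if pending[consumer] == 0:
                    ready.append(consumer)
    return [a for a in order if a != changed]  # the edited source file itself needs no build


pipeline = {
    "basket.tex": ["basket.psd"],
    "basket.mesh": ["basket.fbx"],
    "basket.asset": ["basket.mesh", "basket.tex"],
    "farmer.asset": ["farmer.fbx", "basket.asset"],
    "well.asset": ["well.fbx"],
    "village.level": ["farmer.asset", "well.asset"],
}
plan = rebuild_plan(pipeline, "basket.psd")
```

Editing the basket's source texture triggers four rebuilds, in dependency order, while the basket mesh and the well are left untouched.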

Improved collaboration tools

Despite the distributed, collaborative nature of modern game studios, many of the professional tools used in the game production process are still centralized, single-creator tools. For example, by default, both the Unity and Unreal level editors only support a single designer editing a level at a time. This slows down the creative process, since teams cannot work together in parallel on a single world.

On the other hand, both Minecraft and Roblox support collaborative editing; this is one of the reasons why these consumer platforms have become so popular, despite their lack of other professional features. Once you’ve watched a group of kids building a city together in Minecraft, it’s impossible to imagine wanting to do it any other way. I believe collaboration will be an essential feature of the metaverse, allowing creators to come together online to build and test their work.
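Letting several designers edit one level at once requires every client to resolve conflicting edits the same way. One simple technique, shown here as a sketch rather than how Minecraft, Roblox, or Unreal actually do it, is a last-writer-wins merge per object property, ordered by a logical clock with the editor ID as a tie-breaker. The `Edit` type and `merge` function are hypothetical, and the sketch assumes each client advances its clock past the highest value it has seen.

```python
from dataclasses import dataclass
from typing import Any, Dict, List, Tuple


@dataclass(frozen=True)
class Edit:
    object_id: str  # which object in the level, e.g. a placed tree
    prop: str       # which property was changed, e.g. "position"
    value: Any
    clock: int      # logical (Lamport-style) timestamp assigned by the editing client
    editor: str     # tie-breaker so every client picks the same winner


def merge(edits: List[Edit]) -> Dict[Tuple[str, str], Any]:
    """Last-writer-wins merge: every client computes the same level state,
    no matter what order the edits arrived in."""
    winners: Dict[Tuple[str, str], Edit] = {}
    for edit in edits:
        key = (edit.object_id, edit.prop)
        current = winners.get(key)
        if current is None or (edit.clock, edit.editor) > (current.clock, current.editor):
            winners[key] = edit
    return {key: edit.value for key, edit in winners.items()}


alice = [Edit("tree_17", "position", (4, 0, 9), clock=3, editor="alice")]
bob = [
    Edit("tree_17", "position", (5, 0, 9), clock=4, editor="bob"),
    Edit("well_2", "color", "mossy", clock=4, editor="bob"),
]
state_on_alice_machine = merge(alice + bob)
state_on_bob_machine = merge(bob + alice)
```

Both designers end up with the same level regardless of arrival order, and the list of edits itself doubles as a change-tracking log.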

Overall, collaboration on game development will become real-time across almost every aspect of the game creation process. Some of the ways collaboration may evolve to unlock the metaverse include:

- Real-time collaborative world building, so multiple level designers, or “world builders,” can edit the same physical environment at the same time and see each other’s changes in real time, with full versioning and change tracking. Ideally, a level designer should be able to toggle seamlessly between playing and editing for the most rapid iteration. Some studios are experimenting with this using proprietary tools, such as Ubisoft’s AnvilNext game engine, and Unreal has experimented with real-time collaboration as a beta feature that was originally built to support TV and film production.

- Real-time content review and approval, so teams can experience and discuss their work together. Group discussion has always been a critical part of the creative process. Movies have long had “dailies,” during which production teams review each day’s work together, and most game studios have a large room with a big screen for group discussion. But the tools for remote development are far weaker. Screen-sharing in tools like Zoom isn’t high-fidelity enough for an accurate review of digital worlds. One solution for games may be a form of “spectator mode,” in which a whole team can log in and see through the eyes of a single player. Another is to improve the quality of screen-sharing, using less compression in exchange for higher fidelity, including faster frame rates, stereo sound, more accurate color matching, and the ability to pause and annotate. Such tools should also be integrated with task tracking and assignment. Companies trying to solve this for film include Frame.io and Sohonet.

- Real-time world tuning, so designers can adjust any of the thousands of parameters that define a modern game or virtual world and experience the results immediately. This process of tuning is critical to creating a fun, well-balanced experience, but typically these numbers are hidden away in spreadsheets or configuration files that are hard to edit and impossible to adjust in real time. Some game studios have tried using Google Sheets for this purpose, whereby changes made to configuration values can be immediately pushed out to a game server to update how the game behaves, but the metaverse is going to require something more robust. A side benefit of this feature is that these same parameters can also be modified for a live event or a new content update, making it easier for non-programmers to create new content. For example, a designer could create a special event dungeon and stock it with monsters that are twice as hard to defeat as usual but that drop unusually good rewards.

- A virtual game studio that lives entirely in the cloud, where members of the game creation team (artists, programmers, designers, etc.) can log in from anywhere, on any sort of device (including a low-end PC or tablet), and have instant access to a high-end game development platform along with the full library of game assets. Remote desktop tools like Parsec may play a role in making this possible, but this vision goes beyond remote desktop capabilities to include how creative tools are licensed and how assets are managed.
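The real-time world tuning described above, including the special event dungeon, can be sketched as time-limited override layers on top of a set of base parameters. This assumes the game server recomputes the effective values whenever it needs them; the parameter names, the dates, and the reading of “twice as hard” as double monster health are all hypothetical.

```python
from datetime import datetime
from typing import Any, Dict, List

# Baseline values a designer would otherwise keep in a spreadsheet (hypothetical names)
BASE_TUNING: Dict[str, Any] = {
    "monster.health_multiplier": 1.0,
    "monster.loot_tier": 1,
    "player.move_speed": 5.5,
}


def effective_tuning(base: Dict[str, Any], overrides: List[Dict[str, Any]],
                     now: datetime) -> Dict[str, Any]:
    """Layer every override whose time window contains `now` on top of the base values."""
    result = dict(base)
    for layer in overrides:
        unknown = set(layer["values"]) - set(base)
        if unknown:
            # Catch typos before they reach live servers, unlike a free-form spreadsheet cell
            raise KeyError(f"unknown tuning parameters: {sorted(unknown)}")
        if layer["start"] <= now < layer["end"]:
            result.update(layer["values"])
    return result


event_dungeon = {
    "start": datetime(2022, 10, 31, 18, 0),
    "end": datetime(2022, 11, 1, 6, 0),
    "values": {"monster.health_multiplier": 2.0, "monster.loot_tier": 3},
}
during = effective_tuning(BASE_TUNING, [event_dungeon], datetime(2022, 10, 31, 20, 0))
after = effective_tuning(BASE_TUNING, [event_dungeon], datetime(2022, 11, 2, 12, 0))
```

Validating parameter names up front is one small way such a system could be more robust than a shared spreadsheet.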

The Experience Layer: Re-imagining Operating Live Services

The final layer of retooling for the metaverse involves creating the necessary tools and services to actually operate a metaverse itself, which is arguably the hardest part. It’s one thing to build an immersive world, and it’s quite another thing to run it 24/7, with millions of players across the globe.

Developers must contend with:

- The social challenges of operating any large-scale municipality, which may be filled with citizens who won’t always get along and who will need disputes adjudicated.

- The economic challenges of effectively running a central bank, with its ability to mint new currencies and monitor currency sources and sinks in order to keep inflation and deflation in check.

- The monetization challenges of running a modern ecommerce website, with thousands or even millions of items for sale, and the adjacent need for offers, promotions, and in-world marketing tools.

- The analytic challenges of understanding what’s happening across their sprawling world, in real time, so they can be alerted to problems quickly, before they get out of hand.

- The communication challenges of engaging with their digital constituents, either singly or en masse, and in any language, since a metaverse is (in principle) global.

- The content challenges of making frequent updates, to keep their metaverse constantly growing and evolving.
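The central-bank side of the job starts with knowing where currency enters and leaves the economy. This sketch tallies sources and sinks over some period and flags rapid growth in the money supply; the transaction reasons and the 2% alert threshold are hypothetical, and a deflation check would mirror the inflation one.

```python
from collections import Counter
from typing import Any, Dict, List, Tuple


def currency_report(
    transactions: List[Tuple[str, int]],
    supply_before: int,
    alert_threshold_pct: float = 2.0,
) -> Dict[str, Any]:
    """Tally where currency enters (sources) and leaves (sinks) the economy over a period.

    Positive amounts are currency created, e.g. quest rewards; negative amounts are
    currency destroyed, e.g. repair costs or auction fees.
    """
    sources: Counter = Counter()
    sinks: Counter = Counter()
    for reason, amount in transactions:
        if amount >= 0:
            sources[reason] += amount
        else:
            sinks[reason] += -amount

    net_change = sum(sources.values()) - sum(sinks.values())
    growth_pct = 100.0 * net_change / supply_before
    return {
        "sources": dict(sources),
        "sinks": dict(sinks),
        "net_change": net_change,
        "supply_growth_pct": growth_pct,
        # Money supply growing faster than the threshold is an early sign of inflation
        "inflation_alert": growth_pct > alert_threshold_pct,
    }


report = currency_report(
    [("quest_reward", 50_000), ("monster_drop", 30_000),
     ("repair_cost", -20_000), ("auction_fee", -10_000)],
    supply_before=1_000_000,
)
```

In this example, players earned 80,000 coins and spent 30,000 into sinks, so the supply grew 5%, enough to trip the alert.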

To deal with all of these challenges, companies need well-equipped teams with access to extensive backend infrastructure, along with the dashboards and tools needed to operate these services at scale. Two areas that are particularly ripe for innovation are LiveOps services and in-game commerce.

LiveOps as a field is still in its infancy. Commercial tools such as PlayFab, Dive, Beamable, and LootLocker implement only portions of a full LiveOps solution. As a result, most games still feel forced to implement their own LiveOps stack. An ideal solution would include:

- A live events calendar, with the ability to schedule events, forecast events, and create event templates or clone previous events.

- Personalization, including player segmentation, targeted promotions, and offers.

- Messaging, including push notifications, email, and an in-game inbox, plus translation tools to communicate with users in their own language.

- Notification authoring tools, so non-programmers can author in-game pop-ups and notifications.

- Testing to simulate upcoming events or new content updates, including a mechanism to roll back changes if there are problems.
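Several pieces of an ideal LiveOps solution, namely scheduling, cloning previous events, segmentation, and rollback, can be sketched as a small events calendar. The `LiveEvent` and `EventCalendar` types, event names, and segments are hypothetical and are not modeled on PlayFab or any of the other tools named above.

```python
from dataclasses import dataclass, replace
from datetime import datetime, timedelta
from typing import FrozenSet, List


@dataclass(frozen=True)
class LiveEvent:
    name: str
    start: datetime
    end: datetime
    segments: FrozenSet[str] = frozenset({"all"})  # player segments that see the event


class EventCalendar:
    """Sketch of a live events calendar with cloning, segmentation, and rollback."""

    def __init__(self) -> None:
        self.events: List[LiveEvent] = []

    def schedule(self, event: LiveEvent) -> None:
        if event.end <= event.start:
            raise ValueError(f"{event.name} must end after it starts")
        self.events.append(event)

    def clone(self, name: str, new_name: str, shift: timedelta) -> LiveEvent:
        """Re-run a previous event at a later date, e.g. last year's seasonal event."""
        source = next(e for e in self.events if e.name == name)
        copy = replace(source, name=new_name, start=source.start + shift, end=source.end + shift)
        self.schedule(copy)
        return copy

    def active_for(self, segment: str, now: datetime) -> List[str]:
        """Names of events a player in `segment` should see right now."""
        return [
            e.name for e in self.events
            if e.start <= now < e.end and ("all" in e.segments or segment in e.segments)
        ]

    def cancel(self, name: str) -> None:
        """Roll back an event that turned out to be broken."""
        self.events = [e for e in self.events if e.name != name]


calendar = EventCalendar()
calendar.schedule(LiveEvent("harvest_festival_2022", datetime(2022, 10, 1), datetime(2022, 10, 8)))
calendar.clone("harvest_festival_2022", "harvest_festival_2023", timedelta(days=365))
calendar.schedule(LiveEvent("vip_double_xp", datetime(2023, 10, 2), datetime(2023, 10, 3),
                            segments=frozenset({"vip"})))
noon = datetime(2023, 10, 2, 12, 0)
```

A VIP player at `noon` sees both the cloned festival and the double-XP event, while other players see only the festival; calling `cancel` removes a broken event for everyone.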

More developed but still in need of innovation is in-game commerce. Considering that nearly 80% of digital game revenue comes from selling items or other microtransactions in games that are otherwise free-to-play, it’s remarkable that there aren’t better off-the-shelf solutions for managing an in-game economy—a Shopify for the metaverse.

The solutions that exist today each solve just part of the problem. An ideal solution needs to include:

- Item catalogs, including arbitrary metadata per item.

- App store interfaces for real-money sales.

- Offers and promotions, including limited-time offers and targeted offers.

- Reporting and analytics, with targeted reports and graphs.

- User-generated content, so games can sell content created by their own players and pay a certain percentage of that revenue back to those players.

- Advanced economy systems, such as item crafting (combining two items to create a third), auction houses (so players can sell items to each other), trading, and gifting.

- Full integration with the world of web3 and the blockchain.
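Three of those pieces, an item catalog with arbitrary metadata, two-item crafting, and revenue sharing on player-made items, are sketched below. Every item, the recipe, and the 30% creator share are hypothetical; app store integration, auction houses, and blockchain support are left out.

```python
from collections import Counter
from typing import Dict

# Item catalog with arbitrary per-item metadata (all items and fields are hypothetical)
CATALOG = {
    "iron_ingot": {"price": 10, "rarity": "common"},
    "oak_handle": {"price": 5, "rarity": "common"},
    "iron_axe": {"price": 40, "rarity": "uncommon"},
    "ogre_hat": {"price": 300, "rarity": "rare", "creator": "player_123"},  # player-made
}

# Crafting recipes: two inputs (stored in sorted order) produce one output
RECIPES = {("iron_ingot", "oak_handle"): "iron_axe"}

CREATOR_SHARE_PCT = 30  # hypothetical cut paid back to players who made an item


def craft(inventory: Counter, a: str, b: str) -> str:
    """Consume one of each input item and add the crafted result to the inventory."""
    output = RECIPES.get(tuple(sorted((a, b))))
    if output is None:
        raise ValueError(f"no recipe combines {a} and {b}")
    needed = Counter([a, b])
    if any(inventory[item] < count for item, count in needed.items()):
        raise ValueError("missing ingredients")
    inventory.subtract(needed)
    inventory[output] += 1
    return output


def settle_sale(item_id: str) -> Dict[str, int]:
    """Split a sale's revenue between the studio and, for player-made items, the creator."""
    item = CATALOG[item_id]
    creator = item.get("creator")
    if creator is None:
        return {"studio": item["price"]}
    payout = item["price"] * CREATOR_SHARE_PCT // 100  # integer currency, no float rounding
    return {"studio": item["price"] - payout, creator: payout}


inventory = Counter({"iron_ingot": 2, "oak_handle": 1})
crafted = craft(inventory, "oak_handle", "iron_ingot")
```

Keeping recipes keyed on sorted input pairs means players can combine items in either order, and integer arithmetic on payouts avoids rounding surprises in a real-money economy.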

What’s Next: Transforming Game Development Teams

In this article, I have shared a vision for how games will transform as new technologies open up composability and interoperability between games. It is my hope that others within the game community share my excitement for the potential that is yet to come, and that they are inspired to join me in building the new companies needed to unleash this revolution.

This coming wave of change will do more than provide opportunities for new software tools and protocols. It will change the very nature of game studios, as the industry moves away from monolithic single studios and towards increased specialization across new horizontal layers.

In fact, I think that in the future we will see much greater specialization in the game production process, including the emergence of:

- World builders who specialize in creating playable worlds, both believable and fantastic, that are populated with creatures and characters appropriate for that world. Consider the incredibly detailed version of the Wild West as built for Red Dead Redemption. Instead of investing so much in that world for a single game, why not re-use that world to host many games? And why not continue investing in that world so it grows and evolves, shaping itself over time to the needs of those games?

- Narrative designers who craft compelling interactive narratives set within these worlds, filled with story arcs, puzzles, and quests for players to discover and enjoy.

- Experience creators who build world-spanning playable experiences that are focused on gameplay, reward mechanisms, and control schemes. Creators who can bridge between the real world and the virtual world will be especially valuable in the coming years as more and more companies try to bring portions of their existing operations into the metaverse.

- Platform builders who provide the underlying technology used by the specialists listed above to do their work.

The team at a16z Games and I are excited to be investing in this future, and I can’t wait to see the incredible levels of creativity and innovation that these changes will unleash across our industry. Games are already the single largest sector of the entertainment industry, and they are poised to grow even larger as more sectors of the economy move online and into the metaverse.

And we haven’t even touched on some of the other exciting advances on the way, such as Apple’s new augmented reality headset, Meta’s recently announced VR prototypes, or the arrival of modern, high-performance 3D graphics in the web browser with WebGPU.

There has truly never been a better time to be a creator.