The COVID-19 pandemic isn’t over and it might yet kill more than ten million people worldwide. From the beginning, the institutional response has been lethargic. While officials (including, regrettably, prominent scientific officeholders) provided public assurances, the U.S. did virtually no surveillance sequencing in January or February, even as outbreaks took hold in China and Italy. The CDC botched the initial diagnostic test. Even once working tests existed, they remained invariably slow and hard to obtain for members of the public. We didn’t get off on the right foot.

As the first U.S. lockdowns commenced in March last year, we reached out to various top scientists, and were surprised to learn that funding for COVID-19-related science was not readily available. We expected the U.S.’s immense government funding systems to be unleashed, with decisions made in days if not in hours. This is what happened during World War II, which killed fewer Americans.

Instead, we found that scientists (among them the world’s leading virologists and coronavirus researchers) were stuck on hold, waiting for decisions about whether they could repurpose their existing funding for this exponentially growing catastrophe. It’s worth visiting the application overview that the National Institutes of Health (NIH) launched for this in March 2020 to get a tangible sense of what those seeking emergency funding were facing.

And so, in early April, we decided to start Fast Grants, which we hoped could be one of the faster sources of emergency science funding during the pandemic. We had modest hopes given our inexperience and lack of preparation, but we felt that the opportunity to provide even small accelerations would be worthwhile given the scale of the disaster.

The original vision was simple: an application form that would take scientists less than 30 minutes to complete and that would deliver funding decisions within 48 hours, with money following a few days later.

We assembled the program under the auspices of the Mercatus Center at George Mason University, raised some initial funding, and put together the website. About 10 days after having the original idea, we launched. To help identify the most immediately deserving recipients, our criteria were quite stringent: eligibility was restricted to “principal investigators” (that is, scientists running their own labs or research programs) who were already working on COVID-19-related research (rather than those who merely had ideas as to how they could be).

Given these criteria, we expected to receive at most a few hundred applications. Within a week, however, we had 4,000 serious applications, with virtually no spam. Within a few days, we started to distribute millions of dollars of grants, and, over the course of 2020, we raised over $50 million and made over 260 grants. All of this was done at a cost of less than 3% Mercatus overhead, thanks in part to infrastructure assembled for Emergent Ventures, which was also designed to make speedy and efficient (non-biomedical) grants.

The grant applications were refereed by a team of 20 mostly early-career individuals drawn from top universities and labs, who worked hard to vet and review the more than 6,000 applications received over the course of the program. Every funded application was reviewed by at least three reviewers, but unanimity was not required: the goal was to identify projects that at least one or two reviewers thought were very much worth funding. (Successful NIH grant applications, on the other hand, are typically reviewed by 10-20 scientists and program officers across three phases of review.)

“Let’s do it” was then the basic attitude and we were much more worried about missing out on supporting important work than looking silly.

The first round of grants was given out within 48 hours. Later rounds of grants, which often required additional scrutiny of earlier results, were given out within two weeks. These timelines were much shorter than the alternative sources of funding available to most scientists. Grant recipients were required to do little more than publish open access preprints and provide monthly one-paragraph updates. We allowed research teams to repurpose funds in any plausible manner, as long as they were used for research related to COVID-19. Besides the 20 reviewers, from whom perhaps 20-40 hours each was required, the total Fast Grants staff consisted of four part-time individuals, each of whom spent a few hours per week on the project after the initial setup.

We plan to take a closer look at these projects in the future, but some of the noteworthy work supported by Fast Grants includes:

- The SalivaDirect team at Yale demonstrated that saliva-based COVID-19 tests can work just as well as those using nasopharyngeal swabs. This work was critical in easing the shortages of swabs and of trained clinicians (needed to administer swab-based tests) at the outset of the pandemic.

- We funded a number of clinical trials for repurposed drugs. The interim analysis for one of these trials (just completed) suggests that one common generic drug may reduce hospitalization from COVID-19 by about 40%. We expect an announcement here in the near future.

- Research into the causes of differential outcomes to COVID-19 infection based on underlying genetic factors and immune response profiles.

- Research into “Long COVID”, which is now being followed up with a clinical trial on the ability of COVID-19 vaccines to improve symptoms.

- Tracking the spread of new COVID-19 “variants of concern” at a diversified regional portfolio of sequencing labs, before other sources of funding had stepped up.

About 356 papers found via Google Scholar credit Fast Grants so far. Fast Grants awardees published a number of highly cited papers, including Lucas et al (Nature, 2020) on misfired immune responses in patients that develop severe COVID-19; Gordon et al (Nature, 2020) on the virus-host protein interactions that suggest new therapeutic strategies; Robbiani et al (Nature, 2020) on the potency of antibody responses in recovering COVID-19 patients; and Korber et al (Cell, 2020) on tracking the spread of spike variants that may be more transmissible.

Some of the other Fast Grants investments were speculative, and may (or may not) pay dividends in the future, or for the next pandemic. Examples include:

- Work on a possible pan-coronavirus vaccine at Caltech.

- Work on a possible pan-enterovirus (another class of RNA virus) drug at Stanford University that has now raised subsequent funding.

- Multiple grants going to different labs working on CRISPR-based COVID-19 at-home testing. One example is smartphone-based COVID-19 detection, being worked on at UC Berkeley and Gladstone Institutes.

Of course, many of our grants do not appear to have led to useful discoveries, but that is likely the case under any plausible funding mechanism, and it can even be taken as a sign of risk-taking in project selection. A longer list of grant recipients can be found at fastgrants.org (though not all recipients opted to be listed), and it is certainly possible that the most important grants we made will not be evident for years to come.

What surprised us?

We were pleasantly surprised that so many donors were willing to support a completely unproven project. This might sound anodyne but it’s worth emphasizing: a number of individuals made seven-figure contributions without ever speaking with us. We didn’t expect this! Some donors (though not all) are listed on our website. We were heartened that so many individuals had the courage to act quickly despite the risk of looking foolish if Fast Grants flopped.

We found it interesting that relatively few organizations contributed to Fast Grants. The project seemed a bit weird and individuals seemed much more willing to take the “risk”. (That said, a few institutions did contribute substantial amounts, and we’re very grateful to those that did.) Beyond Fast Grants, we suspect that a lot of valuable projects in the world are blocked on something like this: the willingness of funders (especially institutional funders) to support something unusual simply on the basis of belief in the individuals involved. Too often, the de facto goal of funders is to find established things that look like everything else — it is typically easier to defend supporting a long-established institution. But, of course, the most valuable opportunities will often be those that look quite different, and a structural bias towards familiarity can easily militate against innovation.

We were very positively surprised at the quality of the applications. We had an open call for applications and a very short application form. Despite receiving thousands of applications, a small team of dedicated reviewers was able to grade them in a matter of days. In this, we were greatly assisted by some software for assigning reviews, tracking application scores, etc., that we built in-house. But the speed was enabled in large part by an unapologetic effort to rapidly identify promising projects rather than to optimize for perfection or fairness. While we believe that our reviewers generally did a very good job (we doubt that we’d have changed our decisions a great deal given increased reviewer bandwidth), we wouldn’t suggest that 48-hour turnaround is optimal in all circumstances. However, we do think the possibility of such turnarounds hints that substantially faster decisions may be feasible by other grantmaking bodies.

We initially imagined that the scientists at the top universities would swiftly receive abundant funding, and that Fast Grants’ comparative advantage might lie in identifying promising work outside of the most prestigious institutions. Even though we did not formally count institution environment and reputation at all in our assessment methodology (unlike, for example, NIH grant scoring processes), we were surprised to see that a large fraction of our grants went to people at top twenty institutions, with major recipient institutions including Berkeley, Stanford, MIT, and UCSF. Furthermore, many of these Fast Grants recipients had received minor to no other funding at the time of their application. We didn’t expect people at top universities to struggle so much with funding during the pandemic.

To better understand these funding challenges, we recently ran a survey of Fast Grants recipients, asking how much their Fast Grant accelerated their work. 32% said that Fast Grants accelerated their work by “a few months”, which is roughly what we were hoping for at the outset given that the disease was killing thousands of Americans every single day.

In addition to that, however, 64% of respondents told us that the work in question wouldn’t have happened without receiving a Fast Grant.

For example, SalivaDirect, the highly successful spit test from Yale University, was not able to get timely funding from its own School of Public Health, even though Yale has an endowment of over $30 billion. Fast Grants also made numerous grants to UC Berkeley researchers, and the UC Berkeley press office itself reported in May 2020: “One notably absent funder, however, is the federal government. While federal agencies have announced that researchers can apply to repurpose existing funds toward Covid-19 research and have promised new emergency funds to projects focused on the pandemic, disbursement has been painfully slow. …Despite many UC Berkeley proposals submitted to the National Institutes of Health since the pandemic began, none have been granted.” [Emphasis ours.]

That so many first-rate researchers say that they would have been unable to pursue their COVID-related work without this support was a dismaying surprise.

One of the most obvious strategies for mitigating any new infectious disease is to find an existing drug that’s effective at inhibiting it. There are about 1,800 existing FDA-approved drugs — what if one of them works against COVID-19? It is relatively straightforward (albeit logistically complex) to screen many drugs in tissue culture to identify some that may mitigate the virus. These broad screens will most likely yield a set of plausible drug candidates that you can then test in humans. While the specifics depend on the drug in question, these trials can be pretty safe — after all, you’re drawing on pre-approved drugs.

To this end, we funded a number of drug trials in the U.S. However, we were disappointed that very few trials actually happened. This was typically because of delays from university institutional review boards (IRBs) and similar internal administrative bodies that were consistently slow to approve trials even during the pandemic. (The problems with IRBs have been covered elsewhere.) This is an instance of a broader theme: we were surprised that many entities continued with something close to “business as usual” rather than switching to emergency pandemic mode. For example, university administrative bodies (such as Environment, Health, and Safety departments) were often slow to approve important lab work.

Institutional barriers aside, clinical trial enrollment, management, and execution remain highly inefficient and expensive. It is extremely difficult to quickly and cheaply run human clinical trials, even if the drug being tested is already known to be safe. As a result, more than a year into the pandemic, there are completed U.S. clinical trials for just 10 drugs designed to treat COVID-19, which is a meager haul given the potential value of effective treatments. Making human clinical trials cheaper and quicker is probably one of the biggest opportunities for improving U.S. healthcare both during and beyond pandemics.

We were surprised that some obviously worthy entities were left undersupported. For example, Addgene, a well-known nonprofit that supplies critical reagents to scientists undertaking molecular biology research, was left critically underfunded last spring/summer amid lab shutdowns. Interruption of Addgene’s supplies could have slowed or halted various global scientific efforts targeting COVID-19, since Addgene distributes critical reagents for coronavirus-related research. We were, fortunately, able to make a grant that helped ensure their continued operation. On a different front, as numerous variants of concern (such as B.1.1.7 and B.1.351) took hold late in 2020 and in early 2021, we were surprised that the U.S. was engaging in very little surveillance sequencing with sufficiently fast turnaround to detect their real-time spread. Fast Grants funded a number of top sequencing labs and helped enable roughly a sixfold increase in U.S. surveillance sequencing around the beginning of 2021.

A common theme across all of these is that fairly obvious opportunities were not pursued by incumbent institutions. We give examples of actions Fast Grants took not to indicate some kind of supposed brilliance but rather to emphasize the opposite: Fast Grants pursued low-hanging fruit and picked the most obvious bets. What was unusual about it was not any cleverness in coming up with smart things to fund, but just finding a mechanism for actually doing so. To us, this suggests that there are probably too few smart administrators in mainstream institutions trusted with flexible budgets that can be rapidly allocated without triggering significant red tape or committee-driven consensus.

This lack of empowerment touches upon broader challenges of institutional judgment and institutional courage that have been consistent features during the pandemic. The repeated failures of major bodies, including the CDC and the WHO, have been described at length elsewhere. Smart individuals have been a more reliable source of advice than any prominent institution. High-quality institutional judgment — where evidence is properly weighed and reassessed as new evidence comes to light — has too often been in short supply. In a related fashion, institutions have been consistently hesitant to deviate from their regular, non-pandemic practices. IRBs, universities, and funding agencies remained slow. The FDA failed to approve rapid tests in a timely fashion. The EU and many other political units were too slow in funding and purchasing vaccines. Some agencies argued against the hugely successful British Recovery Trials because they thought an expedited process would be unfair. We think these can be viewed as failures of institutional courage. We have no doubt that many individuals within these bodies would have preferred to shift into “pandemic mode”, but something about the institutional sociology clearly made doing so difficult. Why exactly did so many of the organizations we should have been able to depend on fare so poorly? We think this question deserves comprehensive investigation.

Lastly, we were definitely surprised (and enormously relieved) at the speed and success of vaccine development. The path to vaccines ended up being a good demonstration of the synergy between foundational research, the pharmaceutical/biotech industries, and both private and public funders. We would not have had vaccine candidates as quickly as we did without a great deal of basic science work painstakingly pursued (sometimes for decades) by people like Katalin Karikó, Kizzmekia Corbett, Jason McLellan, and many hundreds of others. While much about our science funding mechanisms could be better, we shouldn’t lose sight of what worked. This research ultimately was supported and that is no small feat. The funders involved (the NIH included) deserve our great gratitude. On the translation front, Moderna, BioNTech, and Novavax, creators of the vaccine candidates that performed best in clinical trials, all started out as privately backed biotech startups that relied on risk-tolerant funders, underscoring the importance of a vibrant private ecosystem.

More broadly, in highlighting some of our surprise at what worked poorly during the pandemic, we don’t want our commentary to come across as one-sided. Many things worked well. Operation Warp Speed, an interagency project that the NIH and FDA both participated in, was an excellent and successful example of bold action and also institutional courage. We were able to produce vaccines as quickly as we did in part because the NIH funded three grants at UT Austin before 2020; thanks to these, we had a stabilized spike protein ready to go. This protein sequence was employed in both the Moderna and BioNTech mRNA vaccines. There are plenty of other examples of critical work that was supported in advance of it being acutely needed in 2020. It’s also important to note that a lot of the unglamorous infrastructure that enabled science to progress quickly during the pandemic, such as databases maintained by the National Center for Biotechnology Information (NCBI, part of the U.S. National Library of Medicine), is enabled and funded by the NIH and other public funding bodies.

What does Fast Grants tell us about science funding models?

We think that the pandemic demonstrates both the strength and weakness of our current science funding models. As a society, we have great commitment to funding science, and our public bodies support a rich ecosystem of fantastic researchers. The scientific discoveries that will bring an end to the pandemic are happening.

However, this support for science exists in a monocultural and generally conservative form. The entities involved in science funding, most notably the NIH, demand long applications and subject those applications to multiple stages of administrative review, written and in-person peer review, program officer review, advisory council review, and even council of councils review. Consensus plays a heavy role. Scientists are discouraged from pursuing research outside of their regular fields and there is a strong preference for funding late-career rather than younger individuals. It is difficult for these bodies, such as the NIH, to adapt as circumstances change.

Even if one believes that NIH-like models are the best system for funding much of the scientific establishment, it’s hard to believe that they should be so dominant. Wouldn’t a diversity of approaches and mechanisms be preferable? Isn’t experimentation the very essence of science?

To get a more specific sense for the character of these problems (anecdotes are common but only go so far), we ran a survey of Fast Grants recipients, and asked some broader questions about their views on science funding.

57% of respondents told us that they spend more than one quarter of their time on grant applications. This seems crazy. We spend enormous effort training scientists who are then forced to spend a significant fraction of their time seeking alms instead of focusing on the research they’ve been hired to pursue.

The adverse consequences of our funding apparatus appear to be more insidious than the mere imposition of bureaucratic overhead, however.

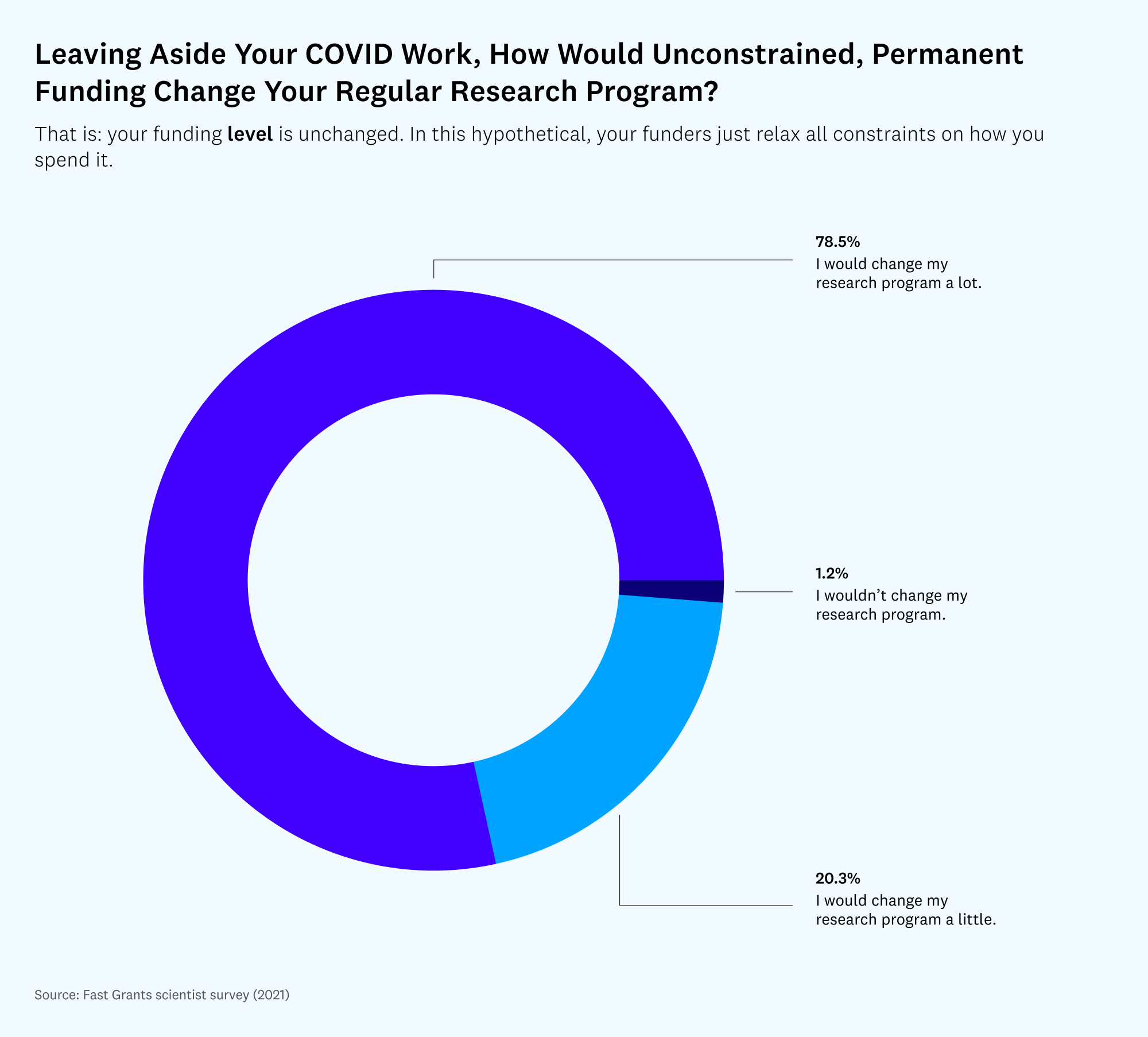

In our survey of the scientists who received Fast Grants, 78% said that they would change their research program “a lot” if their existing funding could be spent in an unconstrained fashion. We find this number to be far too high: the current grant funding apparatus does not allow some of the best scientists in the world to pursue the research agendas that they themselves think are best.

Scientists are in the paradoxical position of being deemed the very best people to fund in order to make important discoveries but not so trustworthy that they should be able to decide what work would actually make the most sense!

We all want more high-impact discoveries. 81% of those who responded said their research programs would become more ambitious if they had such flexible funding. 62% said that they would pursue work outside of their standard field (which the NIH explicitly discourages), and 44% said that they would pursue more hypotheses that others see as unlikely (which, as a result of its consensus-oriented ranking mechanisms, the NIH also selects against).

Many people complain that modern science is too frequently focused on incremental discoveries. To us, this survey makes clear that such conservatism is not the preference of the scientists themselves. Instead, we’ve inadvertently built a system that clips the wings of the world’s smartest researchers, and this is a long-term mistake.

* * *

It’s hard to judge the ultimate significance of Fast Grants. Would the pandemic have unfolded any differently if Fast Grants hadn’t happened? Most importantly, Fast Grants didn’t change the vaccine timeline, and vaccines were clearly the most important component of the response. That said, we think that clinical and testing work we funded may have accelerated improvements in a few key areas. We’re hopeful that some of the work we funded may yet bear significant fruit. We’ll never know the counterfactual, but our best judgment is that some quite important work was accelerated by perhaps six months. (Even though a majority of Fast Grants recipients tell us that the work wouldn’t have happened without its support, it is quite possible that most would have found some way to raise the money eventually.) If this assessment is true, we consider Fast Grants a great success.

Perhaps the most important thing we have learned doing Fast Grants is simply that alternative models of science funding can work. José Luis Ricón has written extensively about various science funding models. The macro conclusion is that we don’t yet know what works or which combination of structures would make most sense. The mainstream science-funding model, as exemplified by the NIH, assumes that slow is good or at least tolerable; or, more generously, that a deliberative and consensus-based process is ideal. According to the NIH, a grant application will typically result in a decision after something between 200 and 600 days.

To us, Fast Grants hints that streamlining the application process might not only produce faster discoveries, but also produce better long-term outcomes by giving scientists more time to do actual science — giving research teams the flexibility to go where the work leads them. This would unlock more ambitious and high-impact research that is otherwise constrained by structural barriers.

We asked Fast Grants recipients how well they think incumbent science funding institutions responded over the course of the pandemic. On average, they graded them “5” on a 10-point scale. We think this captures an important directional truth, namely, that we could do much better.

However, this disappointment from the scientists was tempered by optimism. When asked how much the pandemic changed their view of how quickly things can happen in science, the average score was “9.”

To us, these results suggest that scientists want something better, and are hopeful that better is in fact achievable. We hope that many other actors will try their own experiments, report the results, and find important and effective new ways to enable the discoveries that the world still urgently needs.
