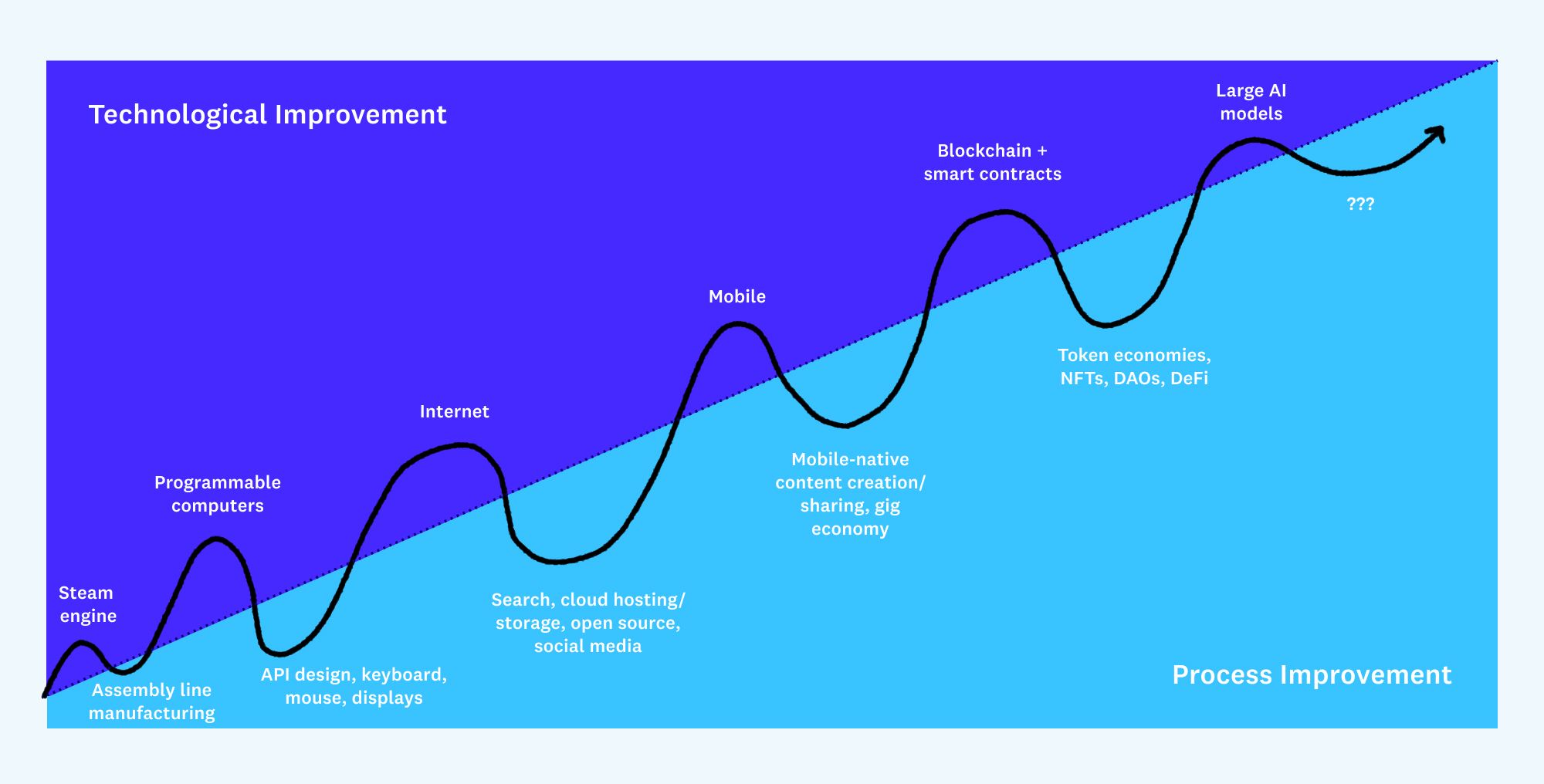

As we saw in Part 1, it’s possible to get started on a number of important problems by building augmentation infrastructure around the strengths of an artificial intelligence model. Does the model generate text? Build around text. Can it accurately predict 3D structures? Build around 3D structures. But taking an artificial intelligence system completely at face value comes with its own limitations.

Douglas Engelbart used the term co-evolution to describe the way in which humanity’s tools and its processes for using those tools adapt and evolve together. Models like GPT-3 and DALL-E represent a large step in the evolution of tools, but that’s only half of the equation. When you build around the model without also building new tools and processes for the model, you’re stuck with what you get. The model’s weaknesses become your weaknesses. If you don’t like the result, it’s up to you to fix it. And since training any of the large, complex AI systems we’ve discussed so far requires massive amounts of data and computation, you likely don’t have the resources to change the model all that much.

This is a bit of a conundrum. On the one hand, we don’t have the resources to change the model significantly. On the other hand, we need to change the model, or at least come up with better ways of working with it, to fit our specific use case. For prompt-based models like GPT-3 and DALL-E, the two easiest ways to tackle this fixed-model conundrum are prompt-hacking and fine-tuning, neither of which is particularly efficient:

- Prompt-hacking: GPT-3 and DALL-E operate over natural-language prompts. Given a descriptive sentence (or, in the case of GPT-3, even a series of input/output examples), the model generates one or more outputs. The quality of the model’s results depends heavily on this prompt, so what happens when the output doesn’t meet your expectations or the needs of your application? You can just try a new prompt! This trial-and-error approach is known as prompt-hacking.

- Fine-tuning: Notice that earlier I said, “You likely don’t have the resources to change the model all that much,” not, “You likely don’t have the resources to change the model at all.” As it turns out, it is possible to change the model a little through a process called fine-tuning. If you have some data specific to your use case, you can continue training the model on it, and the model becomes better tailored to (i.e., fine-tuned for) your application and more likely to produce accurate results. Although fine-tuning is an improvement over the blind search of prompt-hacking, it requires data, which usually means collecting enough good data points and labeling them manually.
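To see why prompt-hacking scales poorly, here is a minimal Python sketch of the trial-and-error loop. The `generate` and `is_acceptable` callbacks are hypothetical stand-ins: in practice, one would wrap a call to a model like DALL-E and the other would often be a person looking at the result. Every failed attempt costs a model call and a round of human review.

```python
from typing import Callable, Optional, Sequence, Tuple


def prompt_hack(
    candidates: Sequence[str],
    generate: Callable[[str], str],
    is_acceptable: Callable[[str], bool],
) -> Optional[Tuple[str, str, int]]:
    """Try prompts one by one until an output is judged acceptable.

    Returns (winning prompt, its output, number of attempts), or None if
    every candidate failed.
    """
    for attempt, prompt in enumerate(candidates, start=1):
        output = generate(prompt)   # one (possibly expensive) model call per attempt
        if is_acceptable(output):   # often a human eyeballing the result
            return prompt, output, attempt
    return None


# Toy usage with stand-in callbacks: the "model" just upper-cases its prompt.
result = prompt_hack(
    ["a cat", "a red dragon skin"],
    generate=str.upper,
    is_acceptable=lambda out: "DRAGON" in out,
)
print(result)  # ('a red dragon skin', 'A RED DRAGON SKIN', 2)
```

Nothing in this loop learns from earlier failures; the search order of `candidates` is the only "strategy," which is exactly what makes the approach blind.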

The goal of augmented intelligence is to make manual processes like these more efficient so humans can spend more time on the things they are good at, like reasoning and strategizing. The inefficiency of prompt-hacking and fine-tuning shows that the time is ripe for a reciprocal step in process evolution. So, in this section, we’ll explore some examples of a new theme, building for the model, and the role it plays in creating more effective augmentation tools.

In-game assets, on demand

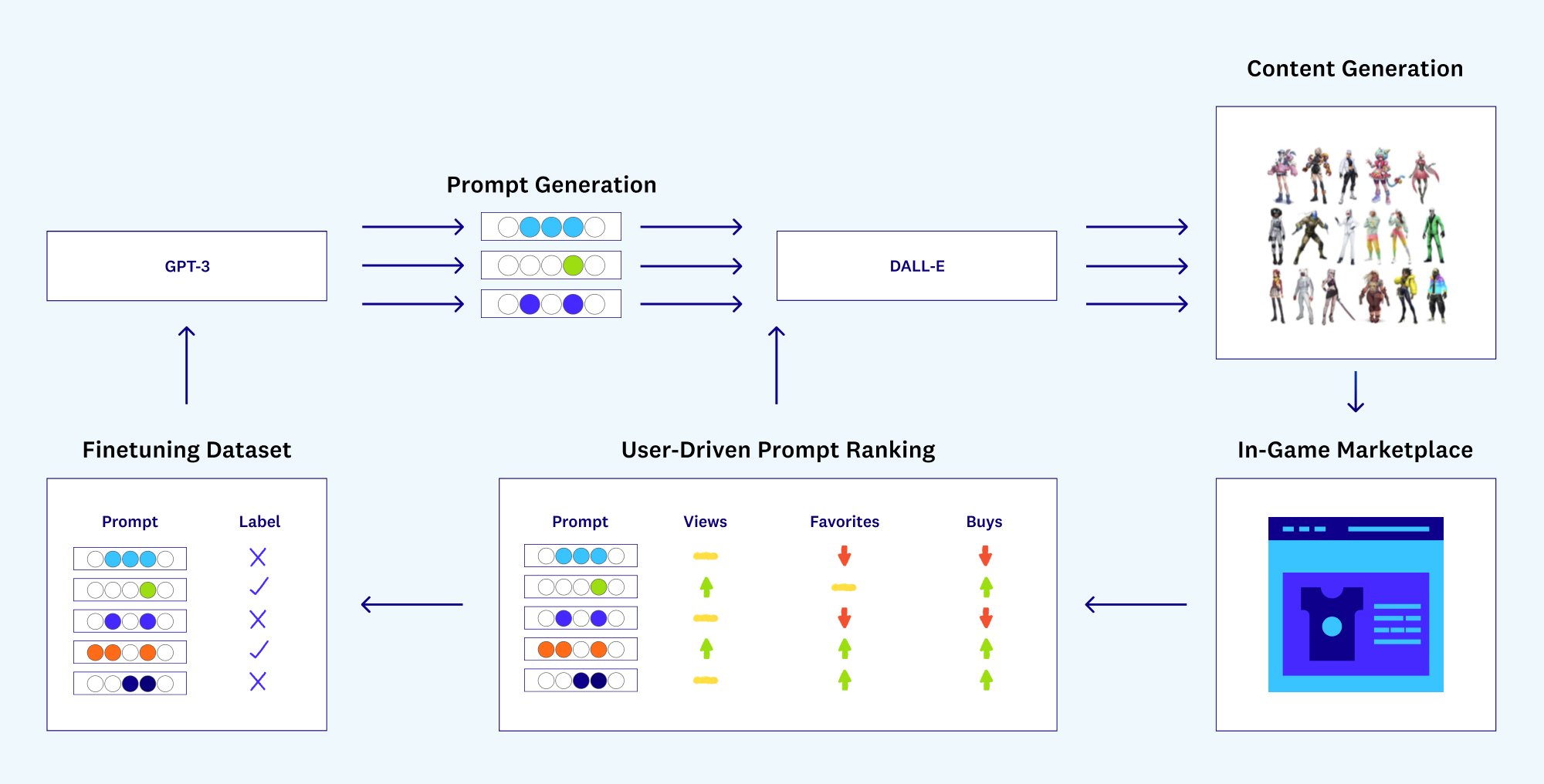

As a working example, let’s say you’re an up-and-coming game developer working on the next online gaming franchise. You’ve seen how games like Call of Duty and Fortnite have created massively successful (and lucrative) marketplaces for custom skins and in-game assets, but you’re a resource-constrained startup. So, instead of developing these assets yourself, you offload content generation to DALL-E, which can generate any number of skins and asset styles for a fraction of the cost. This is a great start, but prompt-hacking your way to a fully stocked asset store is inefficient.

To make things less manual, you can turn prompting over to a text generation model like GPT-3. The key to the virality of a game like Fortnite is the combination of a handful of core game assets (weapons, vehicles, armor) with a variety of unique styles and references, such as eye-catching patterns and colors, superheroes, and the latest pop culture trends. When you seed GPT-3 with your asset types, it can turn any number of these combinations into prompts. Pass those prompts over to DALL-E, and out come your skin designs.
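As a rough sketch of the handoff, the text model receives a seed listing your asset types and themes, writes one image prompt per line, and each line goes to the image model. The `complete` and `render` callbacks are hypothetical wrappers you would write around GPT-3 and DALL-E; the seed wording is illustrative, not a tested prompt.

```python
from typing import Callable, Dict, Sequence


def build_seed_prompt(asset_types: Sequence[str], themes: Sequence[str], n: int) -> str:
    """Assemble the text that seeds the text model (wording is illustrative)."""
    return "\n".join([
        "Write one-sentence descriptions of cosmetic skins for a video game.",
        f"Asset types: {', '.join(asset_types)}.",
        f"Draw on themes such as: {', '.join(themes)}.",
        f"Write {n} descriptions, one per line:",
    ])


def run_handoff(
    asset_types: Sequence[str],
    themes: Sequence[str],
    n: int,
    complete: Callable[[str], str],   # hypothetical wrapper around a GPT-3-style model
    render: Callable[[str], bytes],   # hypothetical wrapper around a DALL-E-style model
) -> Dict[str, bytes]:
    """Text model writes image prompts; image model turns each into a skin."""
    raw = complete(build_seed_prompt(asset_types, themes, n))
    # Keep one prompt per non-empty line, dropping any leading "-" list marker.
    image_prompts = [ln.strip().lstrip("-").strip() for ln in raw.splitlines() if ln.strip()]
    return {p: render(p) for p in image_prompts[:n]}


# Toy usage with stand-in models.
fake_gpt3 = lambda seed: "- neon tiger-stripe rifle\n\n- chrome hoverboard with lightning decals\n"
fake_dalle = lambda prompt: prompt.encode()
skins = run_handoff(["weapons", "vehicles"], ["superheroes", "neon"], 2, fake_gpt3, fake_dalle)
print(list(skins))  # ['neon tiger-stripe rifle', 'chrome hoverboard with lightning decals']
```

Keying the results by prompt text keeps every skin traceable to the prompt that produced it, which matters for the engagement tracking that follows.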

This GPT-3 to DALL-E handoff sounds great, but it only really works if it produces stimulating, high-quality skin designs for your users. Combing through each of the design candidates manually is not an option, especially at scale. The key here is to build tools that let the marketplace do the work for you. Users flock to good content and have no patience for bad content — apps like TikTok are based entirely on this concept. User engagement will therefore be a strong signal for which DALL-E prompts are working (i.e., leading to interesting skin designs) and which are not.

To let your users do the work for you, you’ll want to build a feedback loop that cross-references user activity with each prompt and translates user engagement metrics into a ranking of your active content prompts. Once you have that, standard A/B testing will surface insights about your prompts automatically, and you can prioritize good prompts, remove bad ones, and even compare newly generated prompts with those you have already tested.
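The ranking step could look roughly like the following. It is a deliberately simple stand-in, assuming a hypothetical event log of `(prompt, kind)` pairs and using purchase rate as the engagement score; a proper A/B test would also randomize which users see which prompt and check for statistical significance before retiring anything. The similarity check uses Python’s standard `difflib.SequenceMatcher` as a cheap string-overlap measure; comparing text embeddings would likely capture meaning better.

```python
from collections import defaultdict
from difflib import SequenceMatcher
from typing import Iterable, List, Optional, Sequence, Tuple


def rank_prompts(events: Iterable[Tuple[str, str]], min_impressions: int = 1) -> List[Tuple[str, float]]:
    """Rank prompts by conversion rate, highest first.

    `events` holds (prompt, kind) pairs, where kind is "impression" (a skin
    generated from that prompt was shown) or "purchase" (hypothetical schema).
    """
    shown = defaultdict(int)
    bought = defaultdict(int)
    for prompt, kind in events:
        if kind == "impression":
            shown[prompt] += 1
        elif kind == "purchase":
            bought[prompt] += 1
    scored = [(p, bought[p] / shown[p]) for p in shown if shown[p] >= min_impressions]
    return sorted(scored, key=lambda item: item[1], reverse=True)


def keep_and_drop(ranked: Sequence[Tuple[str, float]], threshold: float) -> Tuple[List[str], List[str]]:
    """Split ranked prompts into ones to prioritize and ones to retire."""
    keep = [p for p, score in ranked if score >= threshold]
    drop = [p for p, score in ranked if score < threshold]
    return keep, drop


def closest_tested(new_prompt: str, tested: Sequence[str]) -> Optional[Tuple[str, float]]:
    """Find the previously tested prompt most similar to a new one (ratio in 0-1)."""
    if not tested:
        return None
    return max(((t, SequenceMatcher(None, new_prompt, t).ratio()) for t in tested), key=lambda x: x[1])
```

In a live system, `min_impressions` would be set well above 1 so that a single lucky sale can’t push a prompt to the top of the ranking.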

But that’s not all — the same user engagement signal can also be used for fine-tuning.

Let’s move one more step back in the pipeline and focus on GPT-3’s performance. As long as you keep track of the inputs you give GPT-3 (asset types + candidate themes), you can join that data with the quality rankings produced further down your content pipeline to create a dataset of successful and unsuccessful input-output pairs. This dataset can be used to fine-tune GPT-3 on game-design-focused prompt generation, making it even better at generating prompts for your application.
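The join described above might be sketched like this. The log record fields (`asset_type`, `theme`, `prompt`), the `input`/`output` row keys, and the success threshold are all hypothetical; the exact training-file format depends on the fine-tuning service you use, so treat the JSON Lines output as a placeholder to adapt. Keeping only successful pairs is one simple choice; the unsuccessful rows stay in the returned list in case you want them later, for example to audit what didn’t work.

```python
import json
from typing import Dict, List, Mapping, Sequence


def build_finetune_rows(
    gpt3_log: Sequence[Mapping[str, str]],
    prompt_scores: Mapping[str, float],
    threshold: float,
) -> List[Dict[str, object]]:
    """Join logged GPT-3 inputs/outputs with downstream engagement scores.

    Each log record is assumed to look like
    {"asset_type": ..., "theme": ..., "prompt": <text GPT-3 produced>}.
    """
    rows = []
    for record in gpt3_log:
        score = prompt_scores.get(record["prompt"])
        if score is None:
            continue  # prompt hasn't been ranked yet, so there's no label
        rows.append({
            "input": f"Asset type: {record['asset_type']}. Theme: {record['theme']}.",
            "output": record["prompt"],
            "successful": score >= threshold,
        })
    return rows


def to_jsonl(rows: Sequence[Mapping[str, object]], successful_only: bool = True) -> str:
    """Serialize rows as JSON Lines, by default keeping only the winners."""
    return "\n".join(json.dumps(r) for r in rows if r["successful"] or not successful_only)
```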

This user-driven cyclical pipeline helps DALL-E generate better content for your users by surfacing the best prompts, and helps GPT-3 generate better prompts by fine-tuning it on examples drawn from your own user activity. With no manual prompt-hacking or hand-labeled fine-tuning data to worry about, you are free to work on bigger-ticket items, like which assets are next in the pipeline and which new content themes might lead to even more interesting skins down the road.

Personalized learning experiences

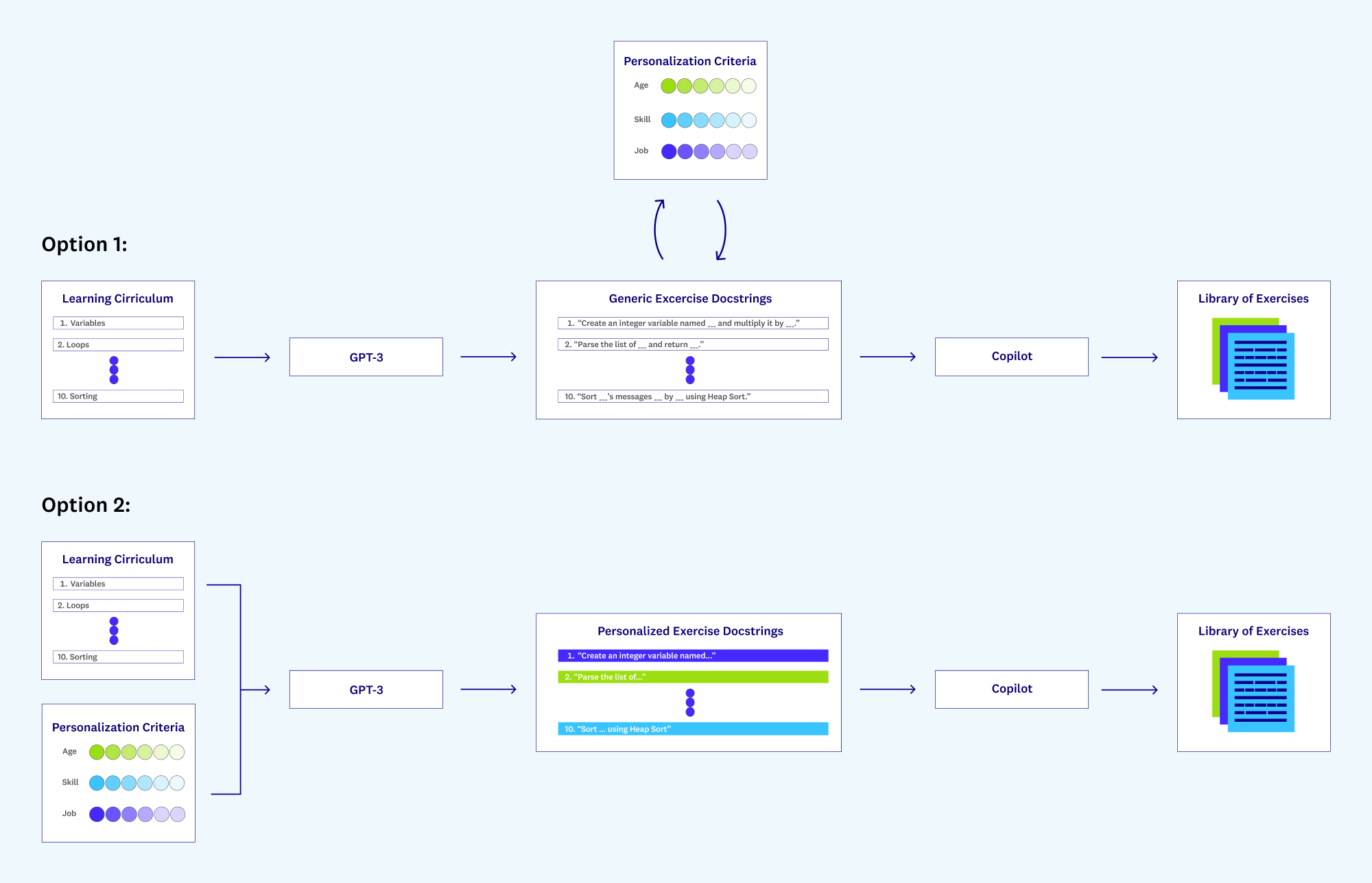

There is also a huge opportunity to build middleware connecting creative industries with creative, personalized content-generating models. AI models and the services they enable (e.g., Copilot) could help with use cases that require novel content creation. This, again, requires using our understanding of the AI system and how it works to find ways of modifying its behavior ever so slightly to create new and better experiences.

Imagine you are building a service for learning to code that uses Copilot under the hood to generate programming exercises. Out of the box, Copilot will generate anywhere from a single line of code to a whole function, depending on the docstring it’s given as input. This is great — you can construct a bunch of exercises really quickly!

To make this educational experience more engaging, though, you’ll probably want to tailor the exercises generated by Copilot to the needs and interests of your users. For example, you might want to personalize across dimensions such as:

- Skill level: This one is obvious. The exercises should be within the Goldilocks zone of the user’s current capabilities: not too easy, not too hard. (Incidentally, this is also what’s referred to as the Flow Zone, the mixture of task difficulty and user capability that is most likely to get the user to enter a state of flow.)

- Age range: If the user is a child, examples filled with superheroes and sports figures might be most exciting. If the user is an adult, cute examples might not be as appealing as, say, current affairs or pop culture references.

- Intended occupation: Many people learn to code in order to get a new job. Working on programming problems related to, say, finance or security might make for a more fulfilling learning experience for a future financial or security engineer.
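One way to make these three dimensions concrete is a small learner profile that filters exercises by difficulty and collects themes by age range and occupation. The 0-1 skill and difficulty scale, the `stretch` width of the flow band, and the theme lists are all illustrative assumptions; a real service would need some way to estimate each learner’s skill and to rate each exercise’s difficulty.

```python
from dataclasses import dataclass
from typing import Dict, List, Optional

# Hypothetical, hand-curated theme lists for each personalization dimension.
AGE_THEMES: Dict[str, List[str]] = {
    "child": ["superheroes", "sports"],
    "adult": ["current affairs", "pop culture"],
}
OCCUPATION_THEMES: Dict[str, List[str]] = {
    "finance": ["stock prices", "loan interest"],
    "security": ["password checks", "log parsing"],
}


@dataclass
class Learner:
    skill: float                      # estimated ability on an assumed 0-1 scale
    age_range: str                    # "child" or "adult"
    occupation: Optional[str] = None  # e.g. "finance"; None if unknown


@dataclass
class Exercise:
    concept: str
    difficulty: float                 # same 0-1 scale as Learner.skill


def in_flow_zone(learner: Learner, exercise: Exercise, stretch: float = 0.15) -> bool:
    """Not too easy (at or above current skill), not too hard (within a small stretch)."""
    return learner.skill <= exercise.difficulty <= learner.skill + stretch


def themes_for(learner: Learner) -> List[str]:
    """Collect themes matching the learner's age range and intended occupation."""
    themes = list(AGE_THEMES.get(learner.age_range, []))
    if learner.occupation:
        themes += OCCUPATION_THEMES.get(learner.occupation, [])
    return themes


ada = Learner(skill=0.5, age_range="adult", occupation="finance")
pool = [Exercise("loops", 0.4), Exercise("recursion", 0.6), Exercise("dynamic programming", 0.9)]
print([e.concept for e in pool if in_flow_zone(ada, e)])  # ['recursion']
print(themes_for(ada))  # ['current affairs', 'pop culture', 'stock prices', 'loan interest']
```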

Generating docstrings yourself is tedious and manual, so personalizing Copilot’s outputs should be as automated as possible. Well, we know of another AI system, GPT-3, that is great at generating virtually any type of text — so maybe we can offload the docstring creation to GPT-3.

This can be done in one of two ways. One approach is to ask GPT-3 to generate generic docstrings that correspond to a particular skill or concept (e.g., looping, recursion). With one prompt, you can generate any number of boilerplate docstrings. Then, using a curated list of target themes and keywords (a slight manual effort), you can swap the variable names in the boilerplate for ones that suit your target audience. Alternatively, you can feed both the target skills/concepts and the themes to GPT-3 at the same time and let it tailor the docstrings to your themes automatically.
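Both approaches can be sketched in a few lines. For the first, this assumes you ask GPT-3 to mark swappable names as `$placeholders` so that Python’s standard `string.Template` can fill them in; that request format is an assumption, not something GPT-3 does by default. For the second, the function only builds the combined prompt text; sending it to the model is left out, and the wording is illustrative.

```python
from string import Template
from typing import Mapping, Sequence


def themed_docstring(boilerplate: str, names: Mapping[str, str]) -> str:
    """Approach 1: fill $placeholders in a generic exercise with themed names."""
    return Template(boilerplate).substitute(names)


def combined_prompt(concept: str, themes: Sequence[str], n: int) -> str:
    """Approach 2: ask the text model for already-themed docstrings in one go."""
    return (
        f"Write {n} Python function signatures, each with a docstring describing a "
        f"beginner exercise that practices {concept}. Set the exercises in the world "
        f"of: {', '.join(themes)}. Do not write the function bodies."
    )


# A generic exercise as GPT-3 might return it if asked to use $placeholders
# for variable names (an assumption about how you phrase the request).
generic = (
    'def count_$things($things, $limit):\n'
    '    """Count the $things in the list that are above $limit, using a loop."""\n'
)
print(themed_docstring(generic, {"things": "scores", "limit": "target"}))
```

The first approach keeps the concept and difficulty fixed while you control the theming exactly; the second hands theming to the model, which saves the curation step but makes output quality harder to predict.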

The success of this idea, of course, comes down to the quality of GPT-3’s content. For one, you’ll want to make sure the exercises generated by this GPT-3/Copilot combination are age-appropriate. Perhaps an aligned model like InstructGPT would be better suited here.

The path forward

We are now over a decade into the latest AI summer. The flurry of activity in the AI community has led to incredible breakthroughs that will have a significant impact across a number of industries and, possibly, on the trajectory of humanity as a whole. Augmented intelligence represents an opportunity to capitalize on this progress, and all it takes is a slight reframing of our design principles for building AI systems. In addition to building models to solve problems, we can think of new ways to build infrastructure around models and for models, and even of ways in which foundation models might work together (like GPT-3 and DALL-E, or GPT-3 and Copilot).

Maybe one day we will be able to offload all of the “dirty work” of life to some artificial general intelligence and live hakuna-matata style, but until that day comes, we should take a page from Engelbart, focusing less on machines that replace human intelligence and more on those that are savvy enough to enhance it.