Kim Lewandowski is co-founder and head of product at security startup Chainguard. Prior to Chainguard, she worked as a product manager at Google, most recently with a focus on securing the company’s development pipeline and the open source tools it used.

In this interview, she discusses the concept of a secure software supply chain and why the idea has caught on so strongly over the past few years. She also addresses the challenges that come with trying to balance developer productivity against the need to vet open source components, and the need for large enterprises to support the open source tools they use in the name of security and project sustainability.

FUTURE: You worked on a lot of projects during your time at Google, but one of the more relevant ones for the purpose of this discussion is Tekton. Can you explain Tekton briefly?

KIM LEWANDOWSKI: Tekton is an open source project. It’s a CI/CD framework and a very pluggable system for helping developers with the source-to-deploy pipeline. It was while starting Tekton that I heard a lot of customers at a lot of companies asking about security; it was always top of mind for everyone involved.

And then came the opportunity to help with protecting Google from open source security threats. There was a really natural segue from doing the actual CI/CD implementation pipeline piece, and then thinking about that through the security lens. Not just the vulnerabilities piece, but a whole smattering of risks that companies undertake when they start relying more heavily on open source.

So, basically the idea of a secure software supply chain. How would you define that for someone who’s not up to speed on that discussion?

Some people hear the phrase and think it’s about the physical supply chain — manufacturing, distribution, and things — and it actually is similar. Think about how products, like our laptops, are produced: There are so many different steps and stages and parts that are going into the end result. When you think about the software supply chain and a piece of software like Zoom, for instance (which we’re using now), underneath that is hundreds, if not thousands, of developers that contributed code that ended up in the final product. And then it gets even more complicated, because the final product is made up of so many other dependencies.

And there are other types of supply chain attacks, like developer impersonation or project takeover. It’s a little bit more holistic than just thinking about a vulnerability in the code or something.

When you start to look at that whole picture — how the software went from a developer writing code all the way to the end — there are weaknesses along every link and stage in that pipeline. All the dependencies, all the things used to build the software, and all the pieces in between are what we’ve been referring to as the software supply chain.

Why is it the topic of conversation now? What changed about the way apps are developed and the way systems are built and architected that is making people pay attention to the various pieces that comprise their applications?

I think about it kind of like the pandemic that’s currently going on. There’s one big incident, and everyone scrambles and pays attention to it, then the attention tapers off a little bit, and then something else happens and it’s a similar story. So I don’t think the problem is new per se, but we have been seeing this huge uptick of attacks over the last few years. For supply chain attacks, I think the latest number I’ve seen is a 650% increase in 2021.

There are a lot of different reasons. One reason that I’ve heard is that we’ve gotten better at protecting other things in technology, so this is the next weakest link that attackers have grasped onto. And open source has grown in adoption and it’s bringing in a whole source of different risk vectors that we haven’t done a great job of securing against. And just the economics of it — with some of these attacks that we’ve seen lately, like the Log4j exploit, the attackers are getting a lot of bang for their buck.

But it’s not exactly a new idea: The one paper that’s always referenced is Ken Thompson’s Reflections on Trusting Trust, from 1984, which explains how you could backdoor into a compiler and then do lots of malicious things through that.

What is the role of open source in all of this? You’ve mentioned it as a security risk, but there is a line of argument that open source is more secure, because you have all those extra eyes on it …

Arguments can be made either way, but I think the reality — and this is how it should be — is that open source really makes up the majority of our software applications today. And what this does is allow companies to innovate and speed up production of what they’re trying to do. But with that comes risk.

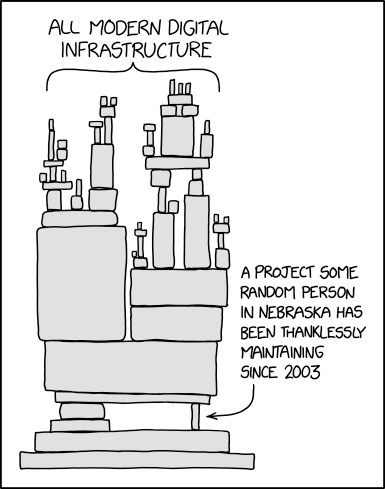

When we talk about open source security risks, there are lots of open source projects — many of them that companies rely on, or that a developer will just find and use because it does what it needs to do — that are not well supported or well maintained. There’s not always a big enterprise company behind these things. There’s an xkcd image that keeps getting passed around, and it’s all this modern infrastructure and architecture being held up by this tiny, little block that’s maintained by some lonely developer in Nebraska. That’s kind of really the situation.

So, while open source is amazing and it’s pushed us all further along in technology and everything, it comes with risk around unsupported projects, not knowing how they’re being maintained, what best practice they’re following, and that type of thing.

How much of the software supply chain conversation is really about containers, and the now very prevalent practice of packaging applications and tools as containers?

Well, that’s what we’re focusing on from my company’s point of view, because that’s where we have the most expertise right now. And container development, in general, is just where people are moving. Unfortunately, I’ve seen that security has been pushed even further down the priority list as folks move to containerized app development. If I’m a developer, I’m not a security developer — I need to push a feature out the door, I need to get my product built. If there’s some open source package that does image-editing or video-editing that I need, I might just grab that instead of writing the code from scratch, not even thinking twice.

Aside from carefully vetting the container images you’re pulling, what’s the answer there? If you look at Docker Hub, for example, there are reports saying that some not-insignificant percentage of images have vulnerabilities. How do you vet them all?

It’d be interesting to hear what your description of careful vetting is, because I think it starts there. It’s just not reality that the people pulling in these packages, or containers, are doing a line-by-line analysis of the code. In Docker Hub, there’s no way to even trace back where the source code came from that was put into the container.

Going back to the physical supply chain analogy, you’ve got to be able to trace these things back to their origin, for starters: Who developed them? What engineers are putting code into them? How were they built? What packages and what dependencies went into them? Having an understanding of these pipelines, and evidence of relevant information along the way, will allow you to make better trust decisions than you can today.
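
To make the tracing questions above concrete, here is a minimal Python sketch of a provenance record that travels with a build artifact and is checked against it. This is an illustration, not Chainguard's or any specific tool's format: the `Provenance` fields, the repository URL, the commit, and the builder name are all hypothetical.

```python
import hashlib
from dataclasses import dataclass


@dataclass(frozen=True)
class Provenance:
    """Hypothetical record answering: what is it, where from, who built it, with what."""
    artifact_sha256: str               # digest of the built artifact
    source_repo: str                   # where the source came from
    commit: str                        # which revision was built
    builder: str                       # which build system produced it
    dependencies: tuple[str, ...] = () # what went into the build


def sha256_hex(data: bytes) -> str:
    """Return the SHA-256 digest of the given bytes as a hex string."""
    return hashlib.sha256(data).hexdigest()


def matches_record(artifact: bytes, record: Provenance) -> bool:
    """True if the artifact's digest equals the digest stored in the record."""
    return sha256_hex(artifact) == record.artifact_sha256


# All values below are made up for illustration.
artifact = b"example container layer bytes"
record = Provenance(
    artifact_sha256=sha256_hex(artifact),
    source_repo="https://example.com/acme/app.git",
    commit="0123abc",
    builder="ci-runner-1",
    dependencies=("libfoo==1.2.0",),
)

print(matches_record(artifact, record))                # True
print(matches_record(artifact + b"tampered", record))  # False
```

A matching digest only shows that the artifact is the one the record describes. It says nothing about whether the record itself is honest, which is why real systems also sign such records.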

It kind of sounds like a case for standardization within organizations, and making sure that different teams and different developers aren’t all building snowflakes or following disparate guidelines.

Exactly. And that’s where we’re seeing big enterprises struggling today, because we all know the SolarWinds incident was something that attacked the build system, specifically. So now companies are taking a more scrutinized look at how the code is being built even internally. This isn’t just open source risk that we’re talking about — insider risk is a real thing that large companies need to worry about.

It’s usually the Wild West, because companies don’t want to get in the way of how their developers are getting their jobs done. So they’re like, “Hey, devs, go take any tool, go use any build system you need to get your job done.” But then from a security standpoint, that becomes a nightmare. There’s one company I talked to that’s got like 120 different build systems. Imagine manufacturing, say, a medicine in 120 different factories that you don’t understand anything about.

How are individuals or companies supposed to square that circle, where on the one hand, we say developer productivity is job number one, and on the other hand we say security is job number one?

I think the first thing is awareness. There’s still not broad awareness of the risks that are out there and how people are thinking about these things, even at more executive level. I don’t know if people are completely aware of the different threat models that are involved here, just as a starting place. I do think the answer here is making developer-friendly tools in different technologies that make this less difficult. Chainguard’s mission is to make the software supply chain secure by default, because we think the only way you can get developers to adopt more secure practices and more secure tooling is to make sure you’re not getting in their way — make it super easy for them.

I just got off a call about an open source thing we’re working on for developers to cryptographically sign Git commits that doesn’t get in their way at all. You’re able to sign your code commit with your credentials, and then people can verify on the other side, basically. That’s one example of how a developer can start being more secure without lots of things getting in their way.

Now, this does put a little bit more onus on developers because you have to log in and do an identity flow, but the economics show that if you catch things earlier, you’re not paying so much for them on the other side.

However, the way we’ve been looking at the problem is that it’s not only shifting left, it’s shifting all the way right, too. You’ve got to understand what the heck you’re running in your production systems and be able to trace it back. That was a big thing we saw with the fallout of the Log4j incident: lots of companies not knowing if they’re impacted at all. So I think we do need things on both sides of the spectrum to help solve this problem.
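
The "am I impacted?" question comes down to having an inventory of what runs in production and being able to query it. The Python sketch below assumes such an inventory already exists; the service names, the `Component` type, and the version data are hypothetical, and the `"2.15.0"` threshold is an illustrative value rather than a substitute for the real advisory.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class Component:
    """One package version running inside one production service (hypothetical)."""
    service: str
    package: str
    version: str


def parse_version(text: str) -> tuple[int, ...]:
    """Turn '2.14.1' into (2, 14, 1); assumes purely numeric dotted versions."""
    return tuple(int(part) for part in text.split("."))


def find_affected(inventory, package, first_fixed):
    """Return components running `package` at a version older than `first_fixed`."""
    fixed = parse_version(first_fixed)
    return [
        c for c in inventory
        if c.package == package and parse_version(c.version) < fixed
    ]


# Made-up inventory for illustration.
INVENTORY = [
    Component("billing-api", "log4j-core", "2.14.1"),
    Component("search", "log4j-core", "2.17.1"),
    Component("frontend", "left-pad", "1.3.0"),
]

# Assumed threshold; consult the actual advisory for affected ranges.
affected = find_affected(INVENTORY, "log4j-core", "2.15.0")
for c in affected:
    print(f"{c.service} runs {c.package} {c.version}")
```

The lookup itself is trivial. The hard part described in the interview is producing a trustworthy inventory in the first place, including dependencies pulled in transitively.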

Many organizations are scared to update, as they think everything will break. The downside of this is that they leave vulnerable software running, and when they do update many versions down the road, it’s a nightmare. We need to get organizations updating more frequently. Broadly, I think the key to all of this is the automation piece — to get out of developers’ way, and to shift left and right to make sure things are as automated as possible.

Shifting Left

Shifting left is the practice of performing testing, security checks, and other processes earlier in the software development lifecycle, in order to catch problems early and avoid them in later stages.

If everything is so complex, why are so many people committed to microservices or cloud-native development or whatever you want to call it? Isn’t there an argument for dialing it back?

Maybe someone’s going to make that argument, but I’m not going to make that argument. In terms of what it’s done for us as a society, and the technology coming out of it now, I think containers just give us a new way to reason about these problems and to be able to catch up. And I don’t even know if it’s “catch up” because, to be honest, I’m not sure how good the tooling compares without the container stuff as we were doing with VMs and whatnot.

We think the problem’s tractable, I guess, but it’s just a lot of work, especially when you start talking about these open source projects that everyone depends on. Because they’re not supported by large enterprises; large enterprises might use them but, oftentimes, they’re not contributing back to the project or providing any sort of financial support.

Supporting open source projects is a big topic of discussion, but beyond better tooling, what can be done to improve the security aspect in particular?

It’s huge. Overall, I think it’s a number of things: I think it’s resources for these projects. I think it’s large enterprises just taking a better look at their inventory, the things they’re relying on, and hopefully contributing back where they’re taking dependencies on some of these projects. There are efforts to make more curated open source projects, where people know they’re more hardened.

I also do think that there’s been a lot of talk at the government level around the software supply chain, and introducing more regulation around it. So companies selling directly to the government will be required to do a certain number of things or meet certain compliance requirements. And then it just becomes another turtles all the way down problem, because those people selling to the government rely on their own vendors, who are using their own open source code. We’re just going to see it trickle down all the way, I think, over the next 5 or 10 years.

Can this have a California emissions standard type of effect, where if you’re building something to comply with one major market or buyer, everyone benefits?

This is a huge problem space, and I really believe that these big companies all coming together to lift the entire state of affairs is a good thing for everyone. So, any progress within open source communities or big open source projects like Kubernetes, I think is just awesome to see and I just hope that we pour more fuel on that fire over the next few years or so. Because I do think it’s not a strategic advantage for a lot of these companies, but it does protect them and does help lift everyone else up.

Who are you talking to, or what level of person are you talking to, within potential customers?

We’re pretty early (we launched in October and just announced our first product), but a lot of the folks that are coming to us are from regulated industries. Banks, healthcare, anyone in a regulated space: this is all top of mind for us. I have conversations with the CISOs and try to figure out what’s keeping them up at night, and ask how they are going to approach software supply chain problems. And are they scared about new regulation? How are they going to meet that?

But then, ultimately, you’ve got to sell these things to developers, too, because they’re the ones using them at the end of the day. I think that’s where the champions come in — being able to demo and showcase different capabilities to developers and security teams, to wrap their heads around how this will actually work in their real production environments. It’s kind of like a sandwich model: The people here have the money, and the people down here are the ones doing the work.

I don’t know if I can envy the job of the CISOs at these companies. They have a tremendous amount of pressure on them to make things more secure, but it’s hard for them to come down with a hammer across an entire organization and say, “You must start doing things this way!” It’s how to find that balance between making things more secure and not pissing everyone off.

What’s the future for what we might call “legacy” approaches to security, firewalls and antivirus programs and the like, in a world increasingly concerned with software supply chains, zero-trust, and these more modern methods?

In the case of firewalls, I think the reality is that we’re not all running things behind closed walls. There’s a lot of migration still to the cloud, in terms of infrastructure as well as SaaS, which means that you need a framework like zero-trust in order to validate access to data, resources, or whatever at the user level rather than just trusting everything on the network.

And let’s not forget that you’re still going to run open source software within all these firewalled environments. There are probably very few systems that exist today that are completely air-gapped and don’t use any open source or third-party code. If any of those components are vulnerable and exploited, intruders are already inside your network and the call is coming from inside the house.

My only hope is people start taking this stuff more seriously, start looking at their systems — where their risks are — and start adopting the best practices and stuff that’s coming down. Because I think there’s a lot of good stuff happening, especially in the open source communities, to help everyone’s lives a little bit more.
